When researchers created an account for a child under 13 on Roblox, they expected heavy guardrails. Instead, they found that the platform’s search features still allowed kids to discover communities linked to fraud and other illicit activity.

The discoveries spotlight the question that lawmakers around the world are circling: how do you keep kids safe online?

Australia has already acted, while the UK, France, and Canada are actively debating tighter rules around children’s use of social media. This month, US Senator Ted Cruz reintroduced a bill that would do the same in the US, and also chaired a Congressional hearing on kids’ online safety.

Lawmakers have said these efforts are meant to keep kids safe online. But as the regulatory tide rises, we wanted to understand what digital safety for children actually looks like in practice.

So, we asked a specialist research team to explore how well a dozen mainstream tech providers are protecting children aged under 13 online.

We found that most services work well when kids use the accounts and settings designed for them. But when children are curious, use the wrong account type, or step outside those boundaries, things can go sideways quickly.

Over several weeks in December, the research team explored how platforms from Discord to YouTube handled children’s online use. They relied on standard user behavior rather than exploits or technical tricks to reflect what a child could realistically encounter.

The researchers focused on how platforms catered to kids through specific account types, how age restrictions were enforced in practice, and whether sensitive content was discoverable through normal browsing or search.

What emerged was a consistent pattern: curious kids who poke around a little, or who end up using the wrong account type, can run into inappropriate content with surprisingly little effort.

A detailed breakdown of the platforms tested, account types used, and where sensitive content was discovered appears in the research scope and methodology section at the end of this article.

When kids’ accounts are opt-in

One thing the team tried was to simply access the generic public version of a site rather than the kid-protected area.

This was a particular problem with YouTube. The company runs a kid-specific service called YouTube Kids, which the researchers said is effectively sanitized of inappropriate content (it sounds like things have changed since 2022).

The issue is that YouTube’s regular public site isn’t sanitized, and even though the company says you must be at least 13 to use the service unless ‘enabled’ by a parent, in reality anyone can access it. From the report:

“Some of the content will require signing in (for age verification) prior [to] viewing, but the minor can access the streaming service as a ‘Guest’ user without logging in, bypassing any filtering that would otherwise apply to a registered child account.”

That opens up a range of inappropriate material, from “how-to” fraud channels through to scenes of semi-nudity and sexually suggestive material, the researchers said. Horrifically, they even found scenes of human execution on the public site. The researchers concluded:

“The absence of a registration barrier on the public platform renders the ‘YouTube Kids’ protection opt-in rather than mandatory.”

When adult accounts are easy to fake

Another worry is that even when accounts are age-gated, enterprising minors can easily get around them. While most platforms require users to be 13+, a self-declaration is often enough. All that remains is for the child to register an email address with a service that doesn’t require age verification.

This “double blind” vulnerability is a big problem: neither the platform nor the email provider checks the user’s real age. Kids are good at creating accounts. The tech industry has taught them to be, because they need accounts for most things they touch online, from streaming to school.
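To make the weakness concrete, here is a minimal sketch of a self-declared age gate. It is an illustration only, not any platform’s real code: the function names, the dates, and the 13+ threshold check are assumptions chosen to mirror the behavior described above. The gate can only evaluate the birth date the user types in, so a false date passes exactly as a true one would.

```python
from datetime import date

MINIMUM_AGE = 13  # the 13+ threshold most platforms in the study state


def age_on(birth_date: date, today: date) -> int:
    """Return the number of completed years between birth_date and today."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def passes_self_declared_gate(claimed_birth_date: date, today: date) -> bool:
    """Hypothetical gate: it trusts whatever birth date the user enters."""
    return age_on(claimed_birth_date, today) >= MINIMUM_AGE


today = date(2025, 12, 1)
real_birth_date = date(2015, 6, 1)     # a 10-year-old answering honestly
claimed_birth_date = date(2005, 6, 1)  # the same child claiming to be 20

print(passes_self_declared_gate(real_birth_date, today))     # honest answer is blocked
print(passes_self_declared_gate(claimed_birth_date, today))  # false answer is accepted
```

Nothing in the check ties the claimed date to the person entering it, which is why the researchers found that a self-declaration alone is easy to get around.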

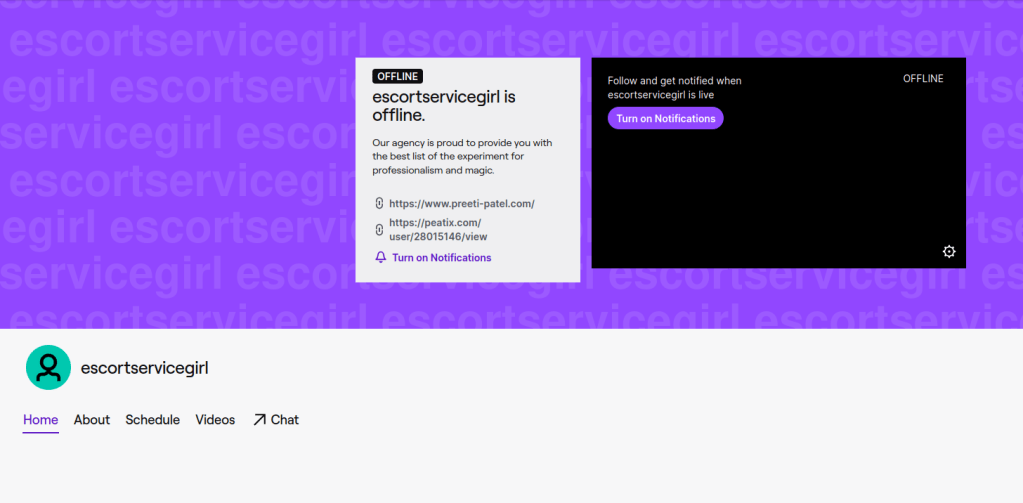

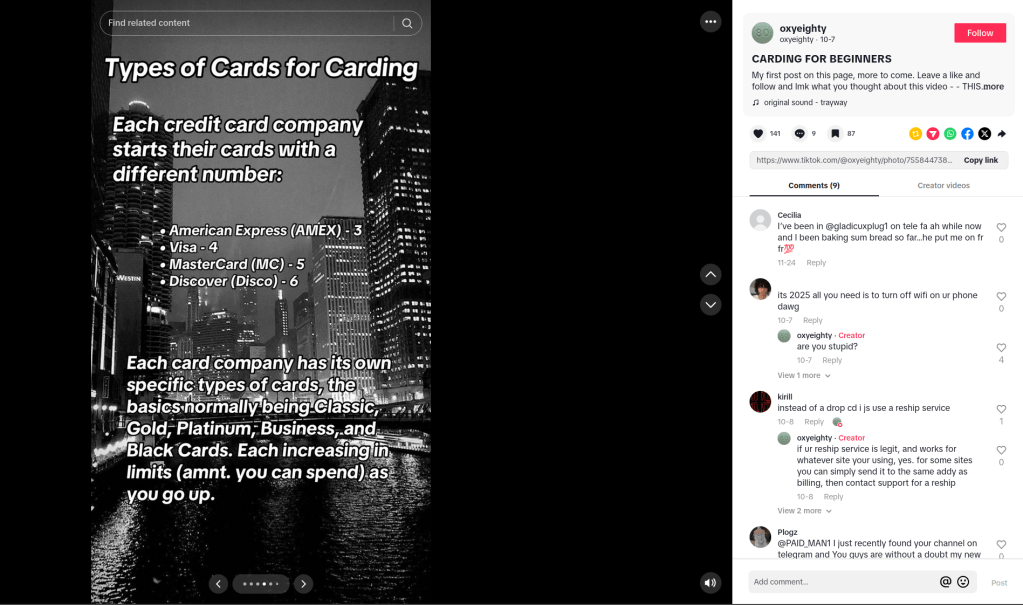

When they do get past the age gates, curious kids can quickly get to inappropriate material. Researchers found unmoderated nudity and explicit material on the social network Discord, along with TikTok content providing credit card fraud and identity theft tutorials. A little searching on the streaming site Twitch surfaced ads for escort services.

This points to a trade-off between privacy and age verification. While stricter age verification could close some of these gaps, it requires collecting more personal data, including IDs or biometric information. That creates privacy risks of its own, especially for children. That’s why most platforms rely on self-declared age, but the research shows how easily that can be bypassed.

When kids’ accounts let toxic content through

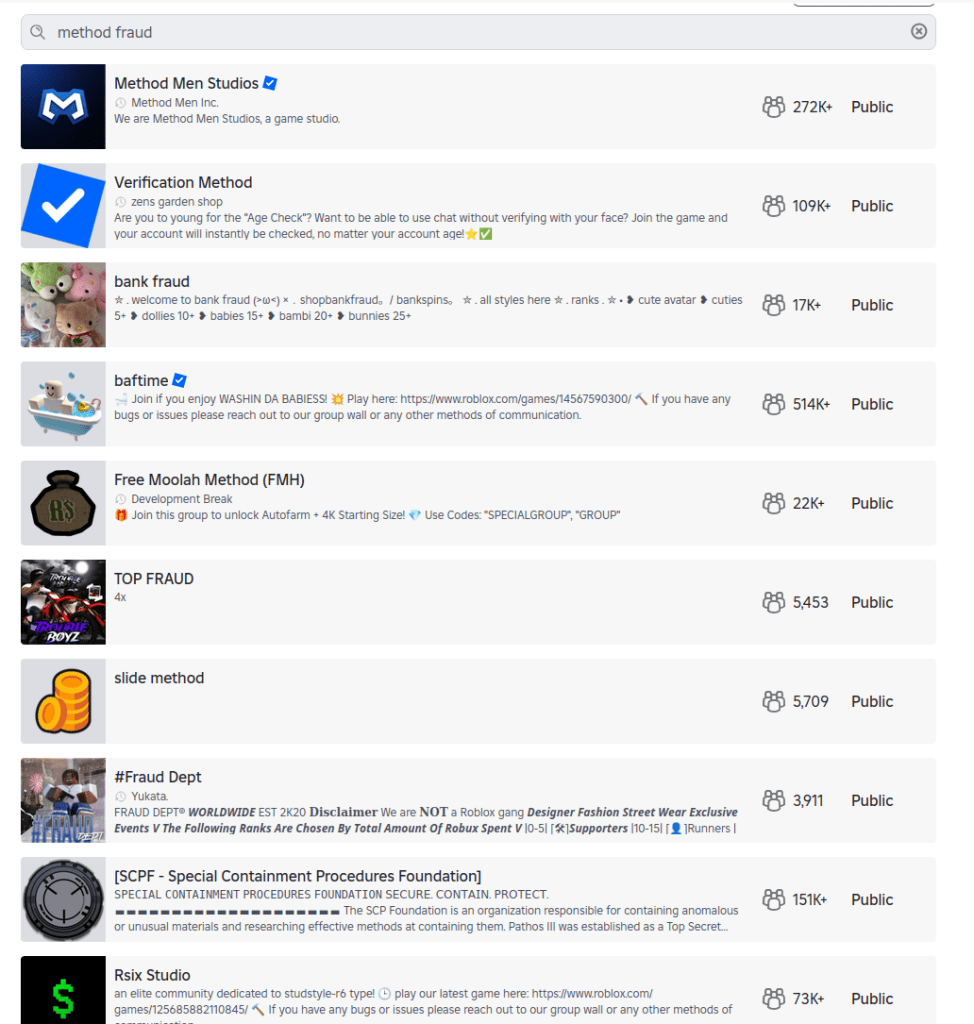

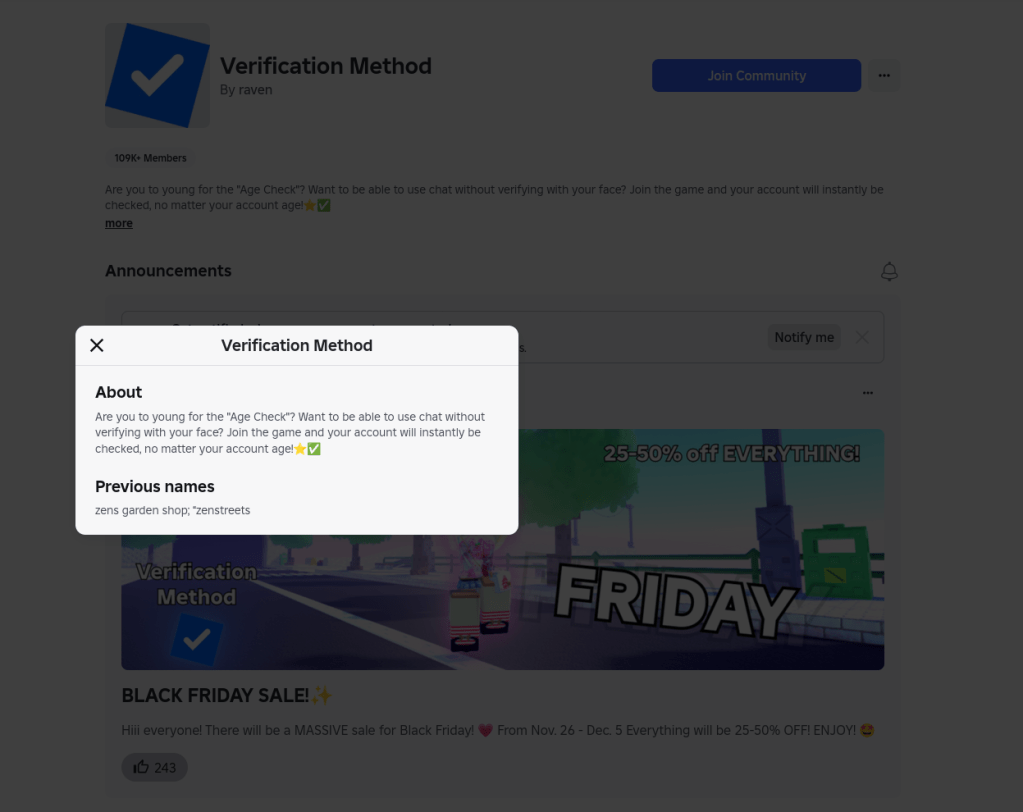

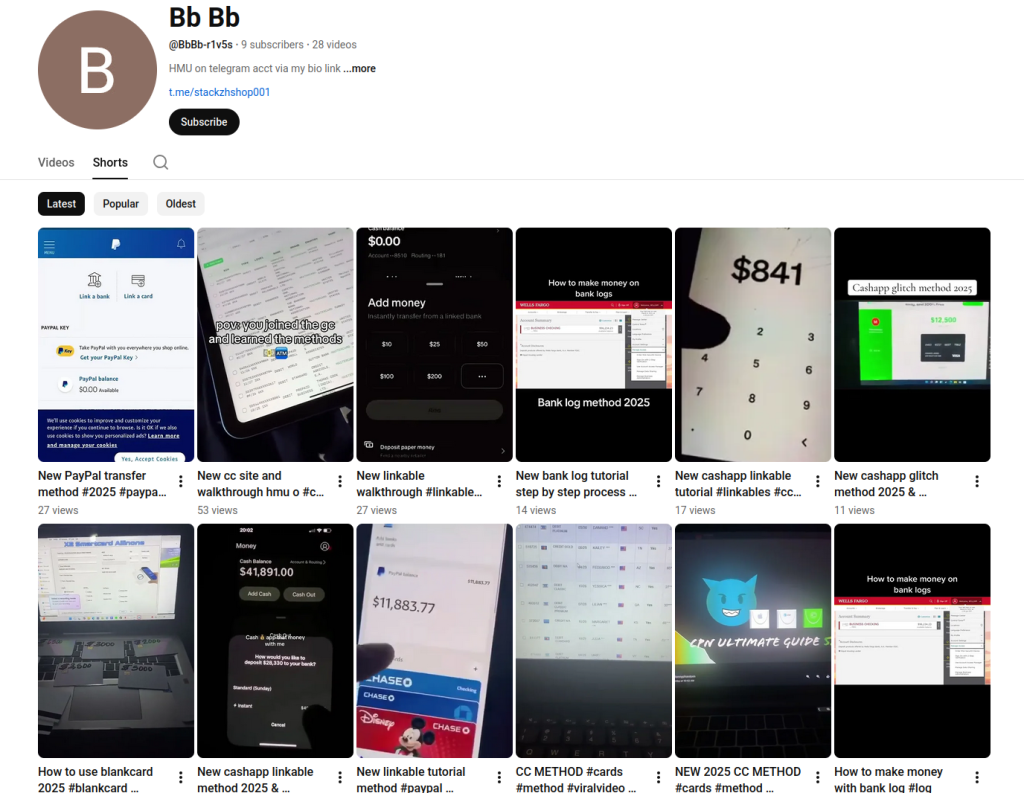

Even accounts built for kids have cracks in their moderation that let risky content through. Roblox, the website and app where users build their own content, filters chats for child accounts. However, it also features “Communities,” which are groups designed for socializing and discovery.

These groups are easily searchable, and some use names and terminology commonly linked to criminal activities, including fraud and identity theft. One, called “Fullz,” uses a term widely understood to refer to stolen personal information, and “new clothes” is often used to refer to a new batch of stolen payment card data. The visible community may serve as a gateway, while the actual coordination of illicit activity or data trading occurs via “inner chatter” between the community members.

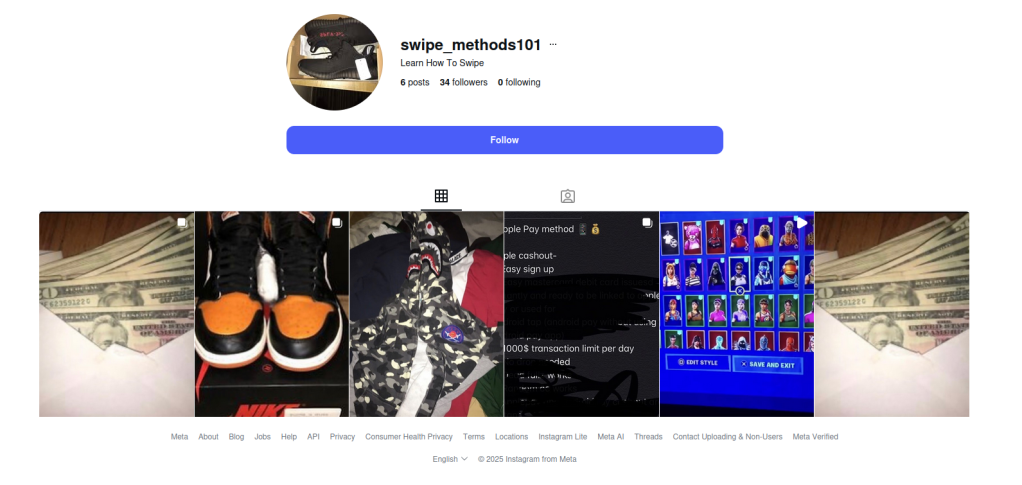

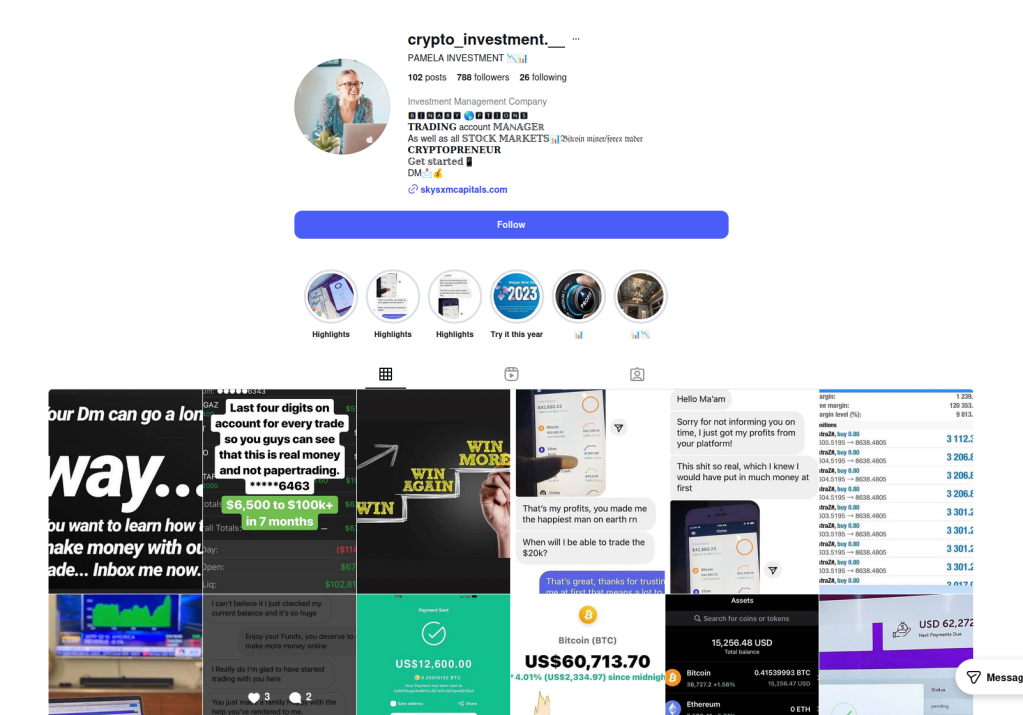

This kind of search wasn’t just an issue for Roblox, warned the team. It found Instagram profiles promoting financial fraud and crypto schemes, even from a restricted teen account.

Some sites passed the team’s tests admirably, though. The researchers simulated underage users who’d bypassed age verification, but were unable to find any harmful content on Minecraft, Snapchat, Spotify, or Fortnite. Fortnite’s approach is especially strict: it disables chat and purchases on accounts for kids under 13 until a parent verifies via email, and it adds further verification steps that rely on a Social Security number or credit card. Kids can still play, but they’re muted.

What parents can do

There is no platform that can catch everything, especially when kids are curious. That makes parental involvement the most important layer of protection.

One reason this matters is a related risk worth acknowledging: adults attempting to reach children through social platforms. Even after Instagram took steps to limit contact between adult and child accounts, parents still discovered loopholes. This isn’t a failure of one platform so much as a reminder that no set of controls can replace awareness and involvement.

Mark Beare, GM of Consumer at Malwarebytes, says:

“Parents are navigating a fast-moving digital world where offline consequences are quickly felt, be it spoofed accounts, deepfake content or lost funds. Safeguards exist and are encouraged, but children can still be exposed to harmful content.”

This doesn’t mean banning children from the internet. As the EFF points out, many minors use online services productively with the support and supervision of their parents. But it does mean being intentional about how accounts are set up, how children interact with others online, and how comfortable they feel asking for help.

Accounts and settings

- Use child or teen accounts where available, and avoid defaulting to adult accounts.

- Keep friends and followers lists set to private.

- Avoid using real names, birthdays, or other identifying details unless they are strictly required.

- Avoid facial recognition features for children’s accounts.

- For teens, be aware of “spam” or secondary accounts they’ve set up that may have looser settings.

Social behavior

- Talk to your child about who they interact with online and what kinds of conversations are appropriate.

- Warn them about strangers in comments, group chats, and direct messages.

- Encourage them to leave spaces that make them uncomfortable, even if they didn’t do anything wrong.

- Remind them that not everyone online is who they claim to be.

Trust and communication

- Keep conversations about online activity open and ongoing, not one-off warnings.

- Make it clear that your child can come to you if something goes wrong without fear of punishment or blame.

- Involve other trusted adults, such as parents, teachers, or caregivers, so kids aren’t navigating online spaces alone.

This kind of long-term involvement helps children make better decisions over time. It also reduces the risk that mistakes made today can follow them into the future, when personal information, images, or conversations could be reused in ways they never intended.

Research findings, scope and methodology

This research examined how children under the age of 13 may be exposed to sensitive content when browsing mainstream media and gaming services.

For this study, a “kid” was defined as an individual under 13, in line with the Children’s Online Privacy Protection Act (COPPA). Research was conducted between December 1 and December 17, 2025, using US-based accounts.

The research relied exclusively on standard user behavior and passive observation. No exploits, hacks, or manipulative techniques were used to force access to data or content.

Researchers tested a range of account types depending on what each platform offered, including dedicated child accounts, teen or restricted accounts, adult accounts created through age self-declaration, and, where applicable, public or guest access without registration.

The study assessed how platforms enforced age requirements, how easy it was to misrepresent age during onboarding, and whether sensitive or illicit content could be discovered through normal browsing, searching, or exploration.

Across all platforms tested, default algorithmic content and advertisements were initially benign and policy-compliant. Where sensitive content was found, it was accessed through intentional, curiosity-driven behavior rather than passive recommendations. No proactive outreach from other users was observed during the research period.

The table below summarizes the platforms tested, the account types used, and whether sensitive content was discoverable during testing.

| Platform | Account type tested | Dedicated kid/teen account | Age gate easy to bypass | Illicit content discovered | Notes |
|---|---|---|---|---|---|
| YouTube (public) | No registration (guest) | Yes (YouTube Kids) | N/A | Yes | Public YouTube allowed access to scam/fraud content and violent footage without sign-in. Age-restricted videos required login, but much content did not. |
| YouTube Kids | Kid account | Yes | N/A | No | Separate app with its own algorithmic wall. No harmful content surfaced. |
| Roblox | All-age account (13+) | No | Not required | Yes | Child accounts could search for and find communities linked to cybercrime and fraud-related keywords. |
| Instagram | Teen account (13–17) | No | Not required | Yes | Restricted accounts still surfaced profiles promoting fraud and cryptocurrency schemes via search. |
| TikTok | Younger user account (13+) | Yes | Not required | No | View-only experience with no free search. No harmful content surfaced. |
| TikTok | Adult account | No | Yes | Yes | Search surfaced credit card fraud–related profiles and tutorials after age gate bypass. |
| Discord | Adult account | No | Yes | Yes | Public servers surfaced explicit adult content when searched directly. No proactive contact observed. |
| Twitch | Adult account | No | Yes | Yes | Discovered escort service promotions and adult content, some behind paywalls. |
| Fortnite | Cabined (restricted) account (13+) | Yes | Hard to bypass | No | Chat and purchases disabled until parent verification. No harmful content found. |
| Snapchat | Adult account | No | Yes | No | No sensitive content surfaced during testing. |
| Spotify | Adult account | Yes | Yes | No | Explicit lyrics labeled. No harmful content found. |
| Messenger Kids | Kid account | Yes | Not required | No | Fully parent-controlled environment. No search or external contacts. |

