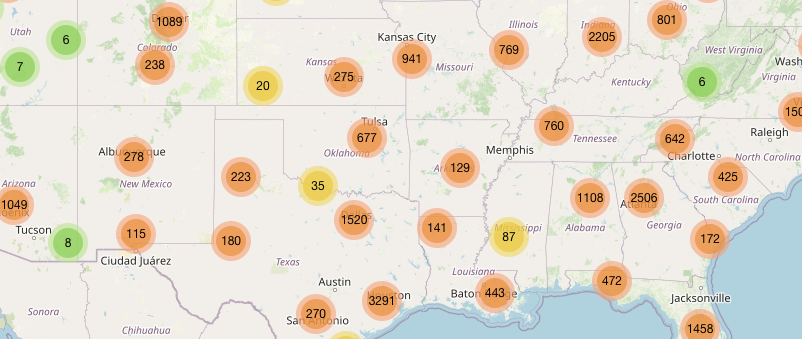

We’re seeing a surge in phishing calendar invites that users can’t delete, or that keep coming back because they sync across devices. The good news is you can remove them and block future spam by changing a few settings.

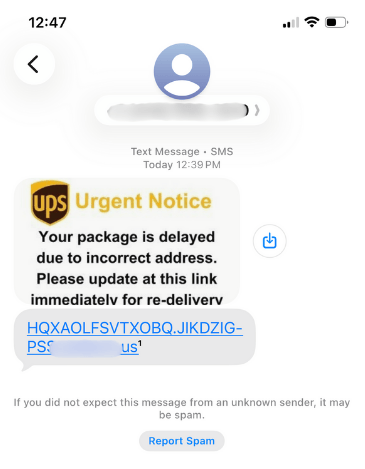

Most of these unwanted calendar entries are there for phishing purposes. They typically warn you about an “impending payment,” but they differ in the subject and in the action they want the target to take.

Sometimes they want you to call a number:

And sometimes they invite you to an actual meeting:

We haven’t followed up on these scams, but when attackers want you to call them or join a meeting, the end goal is almost always financial. They might use a tech support scam approach and ask you to install a Remote Monitoring and Management tool, sell you an overpriced product, or simply ask for your banking details.

The invites behind these entries are usually distributed as email attachments or as download links in messaging apps.

How to remove fake entries from your calendar

This blog focuses on how to remove these unwanted entries. One of the obstacles is that calendars often sync across devices.

Outlook Calendar

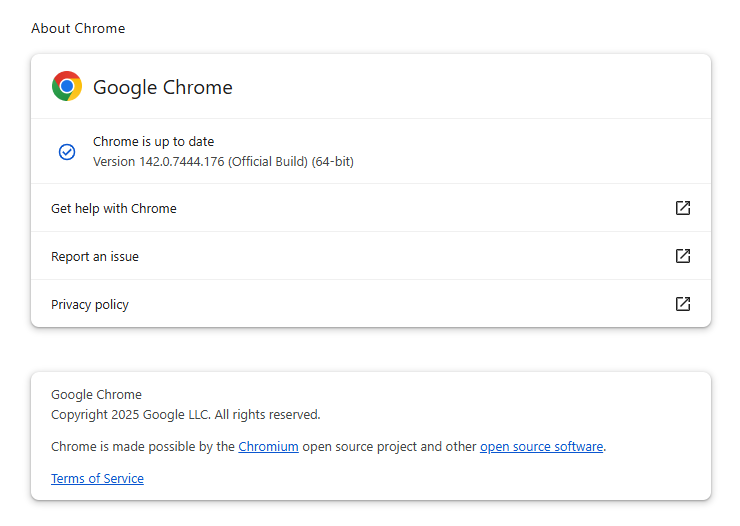

If you use Outlook:

- Delete without interacting: Avoid clicking any links or opening attachments in the invite. If available, use the “Do not send a response” option when deleting, so you don’t confirm that your email address is active.

- Block the sender: Right-click the event and select the option to report the sender as junk or spam to help prevent future invites from that email address.

- Adjust calendar settings: Access your Outlook settings and disable the option to automatically add events from email. This setting matters because even if the invite lands in your spam folder, auto-adding invites will still put the event on your calendar.

- Report the invite: Report the spam invitation to Microsoft as phishing or junk.

- Verify billing issues through official channels: If you have concerns about your account, go directly to the company’s official website or support channels rather than relying on the information in the invite.

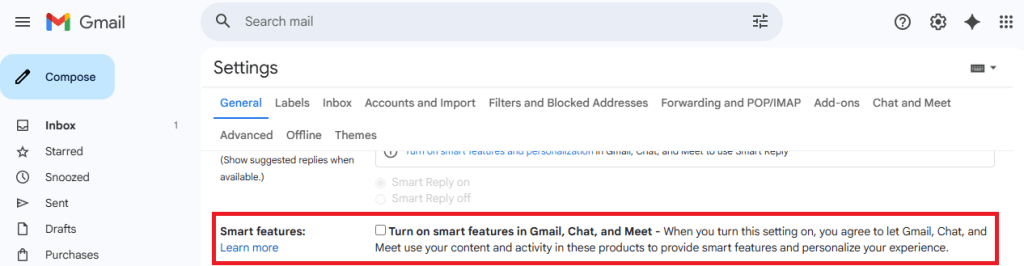

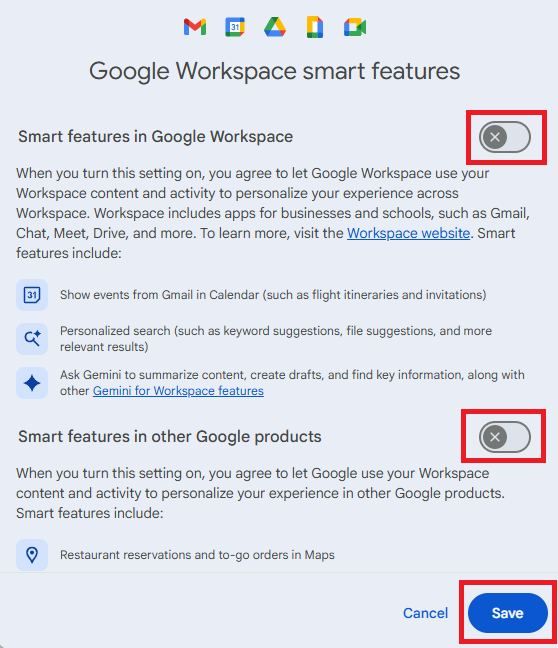

Gmail Calendar

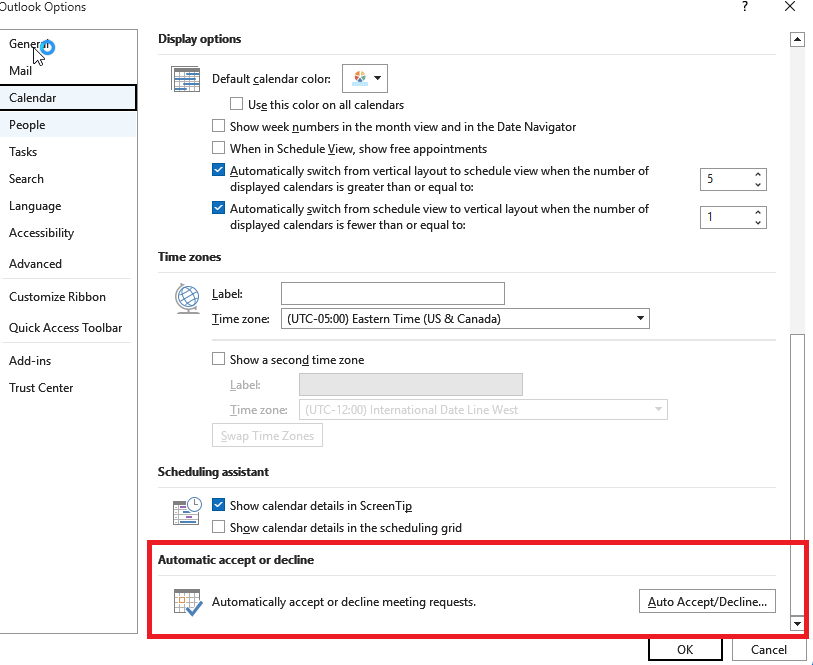

To disable automatic calendar additions:

- Open Google Calendar.

- Click the gear icon in the upper right part of the screen and select Settings.

- Under Event settings, change Add invitations to my calendar to either Only if the sender is known or When I respond to the invitation email. (The default setting is From everyone, which will add any invite to your calendar.)

- Uncheck Show events automatically created by Gmail if you want to stop Gmail from adding events to your calendar on its own.

Android Calendar

To prevent unknown senders from adding invites:

- Open the Calendar app.

- Tap Menu > Settings.

- Tap General > Adding invitations > Add invitations to my calendar.

- Select Only if the sender is known.

For help reviewing which apps have access to your Android Calendar, refer to the support page.

Mac Calendars

To control how events get added to your Calendar on a Mac:

- Go to Apple menu > System Settings > Privacy & Security.

- Click Calendars.

- Turn calendar access on or off for each app in the list.

- If you allow access, click Options to choose whether the app has full access or can only add events.

iPhone and iPad Calendar

The controls are similar to macOS, but you may also want to remove additional calendars:

- Open Settings.

- Tap Calendar > Accounts > Subscribed Calendars.

- Select any unwanted calendars and tap the Delete Account option.

Additional calendars

Subscribed calendars deserve a closer look on every platform. Check both the Outlook Calendar and the mobile Calendar app for Additional Calendars or subscribed URLs, and delete or unsubscribe from any you don’t recognize. This will stop the attacker from being able to add even more events to your Calendar. Looking in both places also helps in case of synchronization issues.

Several victims reported that after they removed an event, it just came back. This is almost always due to synchronization. Make sure you remove the unwanted calendar or event everywhere it exists.

Tracking down the source can be tricky, but it may help prevent the next wave of calendar spam.

How to prevent calendar spam

We’ve covered some of this already, but the main precautions are:

- Turn off auto‑add or auto‑processing so invites stay as emails until you accept them.

- Restrict calendar permissions so only trusted people and apps can add events.

- In shared or resource calendars, remove public or anonymous access and limit who can create or edit items.

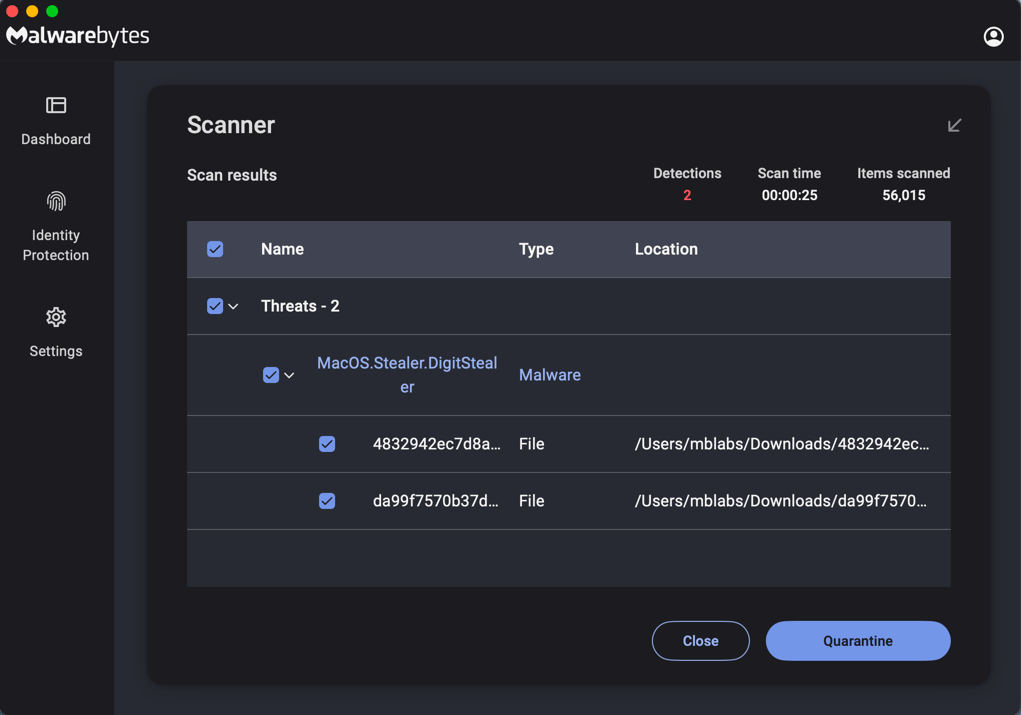

- Use an up-to-date real-time anti-malware solution with a web protection component to block known malicious domains.

- Don’t engage with unsolicited events. Don’t click links, open attachments, or reply to suspicious calendar events with subjects such as “investment,” “invoice,” “bonus payout,” or “urgent meeting.” Just delete the event.

- Enable multi-factor authentication (MFA) on your accounts so attackers who compromise credentials can’t abuse the account itself to send or auto‑accept invitations.
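If you have a suspicious invite as an .ics file and would rather inspect it before any calendar app touches it, a rough triage can be scripted. The sketch below is only an illustration, not a detection tool: it assumes the invite is available as plain iCalendar text, and the names `SUSPICIOUS_PHRASES`, `unfold`, and `flag_events` are hypothetical. The phrase list simply reuses the examples mentioned in this post, and the parsing is deliberately simplified (for instance, it does not handle colons inside quoted property parameters).

```python
# Phrases taken from the examples in this post; this is an illustrative
# list, not a complete or authoritative set of scam indicators.
SUSPICIOUS_PHRASES = (
    "investment",
    "invoice",
    "bonus payout",
    "urgent meeting",
    "impending payment",
)


def unfold(ics_text: str) -> list[str]:
    """Undo iCalendar line folding: a line that starts with a space
    or tab is a continuation of the previous line."""
    lines: list[str] = []
    for raw in ics_text.splitlines():
        if raw[:1] in (" ", "\t") and lines:
            lines[-1] += raw[1:]
        else:
            lines.append(raw)
    return lines


def flag_events(ics_text: str) -> list[dict]:
    """Return one dict per VEVENT whose SUMMARY or DESCRIPTION
    contains any of the suspicious phrases (case-insensitive)."""
    flagged = []
    event = None  # holds SUMMARY/DESCRIPTION while inside a VEVENT
    for line in unfold(ics_text):
        if line == "BEGIN:VEVENT":
            event = {}
        elif line == "END:VEVENT" and event is not None:
            text = " ".join(event.values()).lower()
            hits = [p for p in SUSPICIOUS_PHRASES if p in text]
            if hits:
                flagged.append(
                    {"summary": event.get("SUMMARY", ""), "matched": hits}
                )
            event = None
        elif event is not None and ":" in line:
            name, _, value = line.partition(":")
            # Drop parameters such as ";LANGUAGE=en" from the property name.
            name = name.split(";")[0].upper()
            if name in ("SUMMARY", "DESCRIPTION"):
                event[name] = value
    return flagged
```

A match only means the event deserves a second look. Legitimate invites mention invoices too, and scammers can easily reword their lures, so treat this as a supplement to the settings above rather than a replacement for them.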

Pro tip: If you’re not sure whether an event is a scam, you can feed the message to Malwarebytes Scam Guard. It’ll help you decide what to do next.
