WhatsApp is quietly rolling out a new safety layer for photos, videos, and documents, and it lives entirely under the hood. It won’t change how you chat, but it will change what happens to the files that move through your chats—especially the kind that can hide malware.

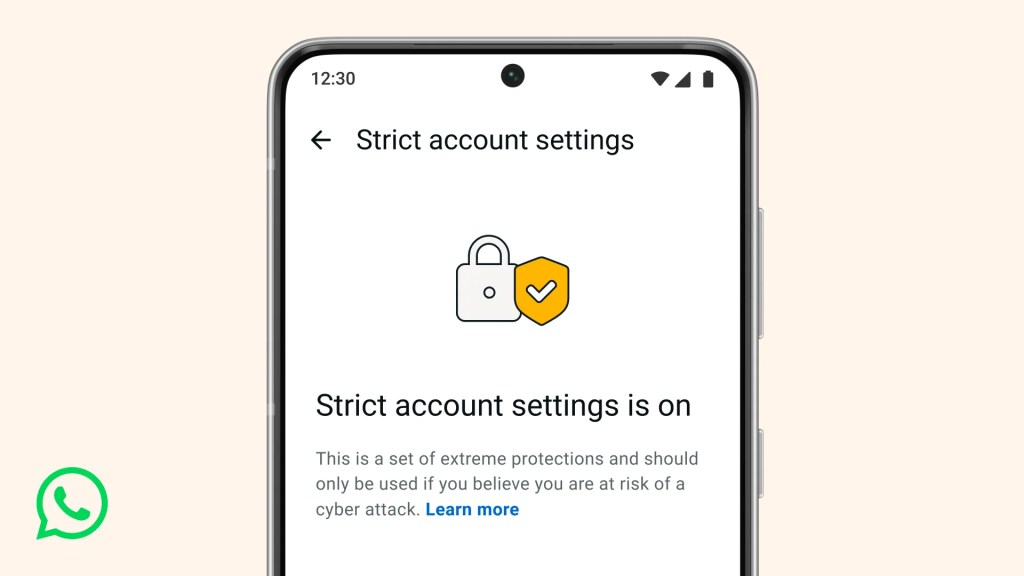

The new feature, called Strict Account Settings, is rolling out gradually over the coming weeks. To see whether you have the option—and to enable it—go to Settings > Privacy > Advanced.

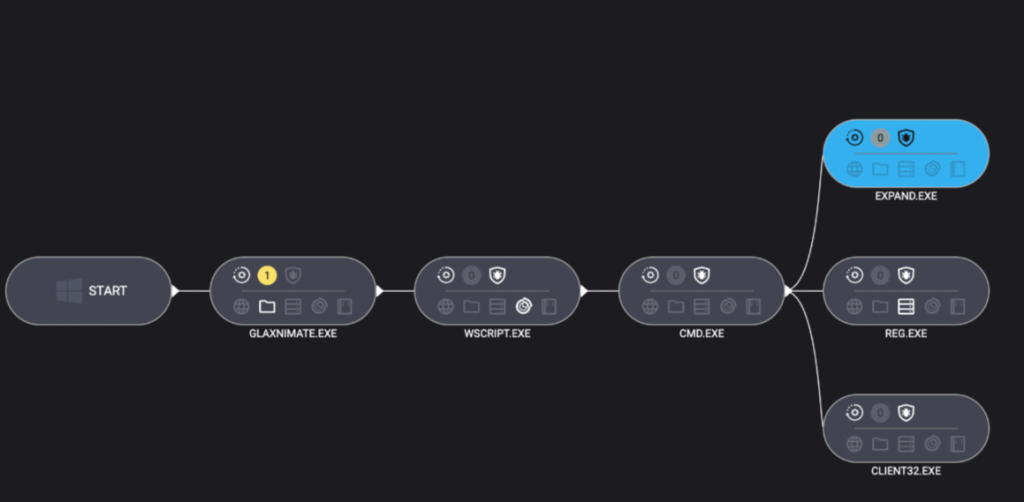

Yesterday, we wrote about a WhatsApp bug on Android that made headlines because a malicious media file in a group chat could be downloaded and used as an attack vector without you tapping anything. You only had to be added to a new group to be exposed to the booby-trapped file. That issue highlighted something security folks have worried about for years: media files are a great vehicle for attacks, and they do not always exploit WhatsApp itself. Sometimes the target is a bug in the operating system or its media libraries.

In Meta’s explanation of the new technology, it points back to the 2015 Stagefright Android vulnerability, where simply processing a malicious video could compromise a device. Back then, WhatsApp worked around the issue by teaching its media library to spot broken MP4 files that could trigger those OS bugs, buying users protection even if their phones were not fully patched.
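To give a feel for what "spotting broken MP4 files" can mean, here is a minimal Python sketch. It is not WhatsApp's code. It assumes only the standard MP4 container layout, where every box starts with a 4-byte big-endian size and a 4-byte type, and the function name `mp4_boxes_look_sane` is made up for illustration. It walks the top-level boxes and rejects any whose declared size cannot be right.

```python
import struct


def mp4_boxes_look_sane(data: bytes) -> bool:
    """Walk top-level MP4 boxes and reject impossible declared sizes.

    Illustrative only: a real validator would also descend into nested
    boxes and check the fields inside each one.
    """
    offset = 0
    while offset < len(data):
        remaining = len(data) - offset
        if remaining < 8:
            return False  # not enough bytes left for a size + type header

        size, _box_type = struct.unpack_from(">I4s", data, offset)
        header_len = 8

        if size == 1:
            # A size of 1 means a 64-bit "largesize" follows the type field.
            if remaining < 16:
                return False
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header_len = 16
        elif size == 0:
            # A size of 0 means the box runs to the end of the file.
            size = remaining

        # A box can be neither smaller than its own header
        # nor larger than the bytes that are actually left.
        if size < header_len or size > remaining:
            return False

        offset += size
    return True
```

The idea is simply to refuse a file before it reaches a decoder that might trust those size fields.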

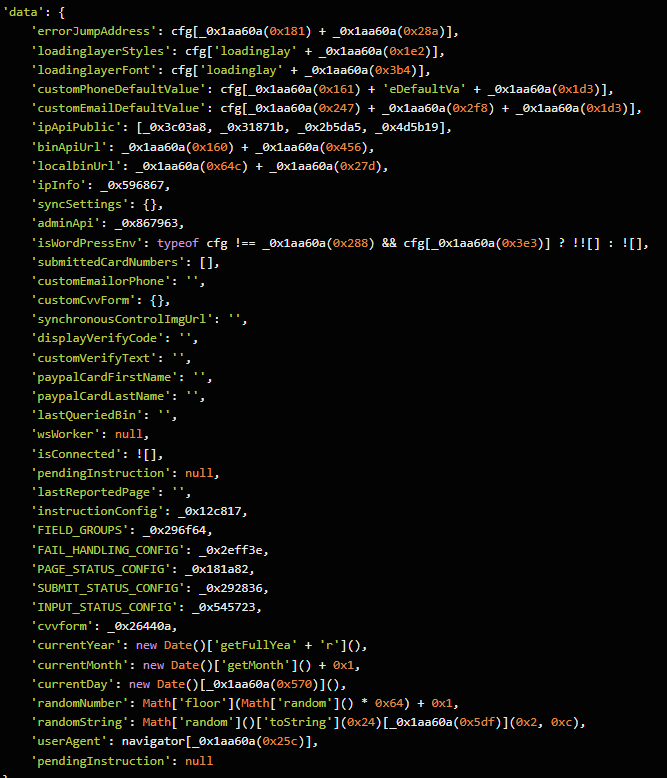

What’s new is that WhatsApp has now rebuilt its core media-handling library in Rust, a memory-safe programming language. This helps eliminate several types of memory bugs that often lead to serious security problems. In the process, it replaced about 160,000 lines of older C++ code with roughly 90,000 lines of Rust, and rolled the new library out to billions of devices across Android, iOS, desktop apps, wearables, and the web.

On top of that, WhatsApp has bundled a series of checks into an internal system it calls “Kaleidoscope.” This system inspects incoming files for structural oddities, flags higher‑risk formats like PDFs with embedded content or scripts, detects when a file pretends to be something it’s not (for example, a renamed executable), and marks known dangerous file types for special handling in the app. It won’t catch every attack, but it should prevent malicious files from poking at more fragile parts of your device.
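Meta has not published Kaleidoscope's code, so the following Python sketch is only a rough picture of the kinds of checks described above. Every name in it (`MAGIC`, `sniff`, `assess`, the flag strings) is hypothetical. It compares a file's extension with its leading "magic bytes", marks executables as dangerous, and does a naive search for active content in PDFs.

```python
from pathlib import PurePath

# Leading "magic bytes" for a handful of well-known formats.
MAGIC = {
    "pdf": b"%PDF-",
    "png": b"\x89PNG\r\n\x1a\n",
    "jpg": b"\xff\xd8\xff",
    "exe": b"MZ",  # Windows executables start with "MZ"
}
EXT_ALIASES = {"jpeg": "jpg"}
DANGEROUS = {"exe"}  # hypothetical list of types needing special handling


def sniff(data: bytes):
    """Return the format suggested by the file's first bytes, or None."""
    for kind, magic in MAGIC.items():
        if data.startswith(magic):
            return kind
    return None


def assess(filename: str, data: bytes) -> list:
    """Return a list of warning flags for an incoming file."""
    ext = PurePath(filename).suffix.lower().lstrip(".")
    ext = EXT_ALIASES.get(ext, ext)
    detected = sniff(data)

    flags = []
    if detected is not None and detected != ext:
        flags.append("type-mismatch")  # e.g. an executable renamed to .jpg
    if detected in DANGEROUS or ext in DANGEROUS:
        flags.append("dangerous-type")
    # Naive byte search: misses markers hidden inside compressed PDF streams.
    if detected == "pdf" and (b"/JavaScript" in data or b"/EmbeddedFile" in data):
        flags.append("pdf-active-content")
    return flags


print(assess("holiday.jpg", b"MZ\x90\x00"))  # an executable posing as a photo
```

A production system would need a far larger signature table and a real PDF parser, but the principle is the same: trust what the bytes say, not what the filename claims.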

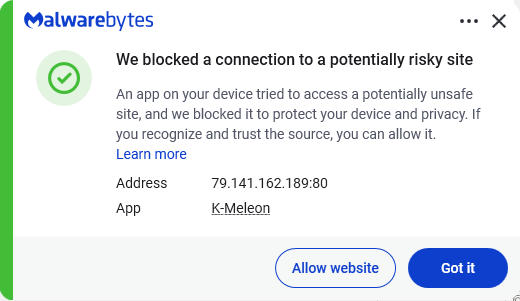

For everyday users, the Rust rebuild and the Kaleidoscope checks are good news. They add a strong, invisible safety net around photos, videos and other files you receive, including in group chats where the recent bug could be abused. They also line up neatly with our earlier advice to turn off automatic media downloads or use Advanced Privacy Mode, which limits how far a malicious file can travel on your device even if it lands in WhatsApp.

WhatsApp is the latest platform to roll out enhanced protections for users: Apple introduced Lockdown Mode in 2022, and Android followed with Advanced Protection Mode last year. WhatsApp’s new Strict Account Settings takes a similar high-level approach, applying more restrictive defaults within the app, including blocking attachments and media from unknown senders.

None of this is a reason to rush back to WhatsApp, or to treat these changes as a guarantee of safety. But at the very least, Meta is showing that it is willing to invest in making WhatsApp more secure.
