Short answer: we have no idea.

People are actively complaining that their mailboxes and queues are being flooded by emails coming from the Zendesk instances of trusted companies like Discord, Riot Games, Dropbox, and many others.

Zendesk is a customer service and support software platform that helps companies manage customer communication. It supports tickets, live chat, email, phone, and communication through social media.

Some people complained about receiving over 1,000 such emails. The strange thing is that, so far, there are no reports of malicious links, tech support scam numbers, or any type of phishing in these emails.

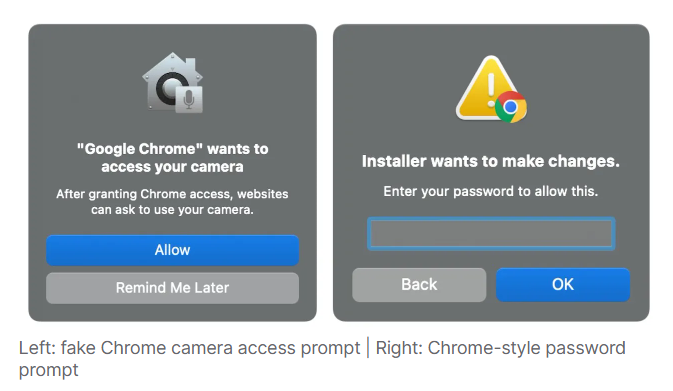

The abusers are able to send waves of emails from these systems because Zendesk allows them to create fake support tickets with email addresses that do not belong to them. The system sends a confirmation mail to the provided email address if the affected company has not restricted ticket submission to verified users.

In a December advisory, Zendesk warned about this method, which they called relay spam. In essence it’s an example of attackers abusing a legitimate automated part of a process. We have seen similar attacks before, but they always served a clear purpose for the attacker, whereas this one doesn’t.

That is despite some of the titles in use definitely being of a clickbait nature. Some examples:

- FREE DISCORD NITRO!!

- TAKE DOWN ORDER NOW FROM CD Projekt

- TAKE DOWN NOW ORDER FROM Israel FOR Square Enix

- DONATION FOR State Of Tennessee CONFIRMED

- LEGAL NOTICE FROM State Of Louisiana FOR Electronic

- IMPORTANT LAW ENFORCEMENT NOTIFICATION FROM DISCORD FROM Peru

- Thank you for your purchase!

- Binance Sign-in attempt from Romania

- LEGAL DEMAND from Take-Two interactive

So, this could be someone testing the system, but it might just as well be someone who simply enjoys causing disruption. Maybe they have an axe to grind with Zendesk. Or they’re looking for a way to send attachments with the emails.

Either way, Zendesk told BleepingComputer that they introduced new safety features on their end to detect and stop this type of spam in the future. But companies are advised to restrict the users that can submit tickets and the titles submitters can give to the tickets.
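To make that advice concrete, here is a minimal sketch of the kind of check a ticket intake system could run before sending an automatic confirmation. It is a toy model, not Zendesk's implementation or API: the names `IntakePolicy`, `Ticket`, and `should_send_confirmation`, the length limit, and the blocked terms are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Set, Tuple


@dataclass
class IntakePolicy:
    """Hypothetical intake settings; not taken from any real product."""
    require_verified_requester: bool = True
    max_subject_length: int = 80
    # Illustrative terms only, loosely based on the subject lines listed above.
    blocked_subject_terms: Tuple[str, ...] = (
        "free", "legal notice", "take down", "law enforcement",
    )


@dataclass
class Ticket:
    requester_email: str
    subject: str


def should_send_confirmation(ticket: Ticket, policy: IntakePolicy,
                             verified_emails: Set[str]) -> bool:
    """Return True only if an automatic confirmation email may be sent.

    verified_emails is expected to contain lowercase addresses.
    """
    # Restrict who can trigger outbound mail: unverified addresses get nothing.
    if (policy.require_verified_requester
            and ticket.requester_email.lower() not in verified_emails):
        return False
    # Restrict what the submitter can put in the title.
    if len(ticket.subject) > policy.max_subject_length:
        return False
    subject = ticket.subject.lower()
    if any(term in subject for term in policy.blocked_subject_terms):
        return False
    return True


verified = {"alice@example.com"}
strict = IntakePolicy()
# An open policy models an instance that accepts tickets from anyone.
open_policy = IntakePolicy(require_verified_requester=False,
                           blocked_subject_terms=())

spam = Ticket("victim@example.org", "FREE DISCORD NITRO!!")
genuine = Ticket("alice@example.com", "Cannot log in")
```

With the open policy, the spam ticket would trigger a confirmation to an address the submitter does not own, which is the relay behavior described above. With the strict policy, only the genuine ticket from a verified address gets one. A simple term blocklist like this is easy to evade, so it is best read as an illustration of the idea rather than a complete defense.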

Stay vigilant

In the emails we have seen, the links in the tickets are legitimate and point to the affected company’s ticket system. And the only part of the emails the attackers should be able to manipulate is the title and subject of the ticket.

But although everyone involved tells us just to ignore the emails, it is never wrong to handle them with an appropriate amount of distrust.

- Delete or archive the emails without interacting.

- If you did not submit the ticket, do not click on the links or call any telephone number mentioned in it. Reach out through verified channels.

- Ignore any actions advised in the parts of the email the ticket submitter can control.

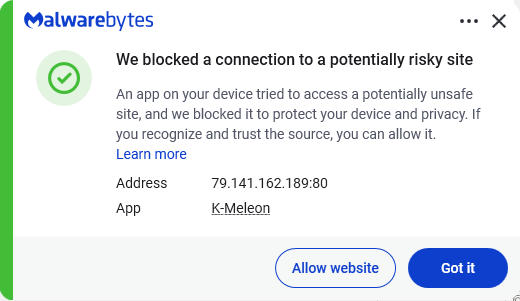
