It’s getting harder to keep your Windows space truly yours, as Microsoft increasingly serves annoying ads and tracks your data across third-party apps.

Pushing back against your eroding privacy has been a scattered and sometimes complicated process… but we’re making it easier for you. With the latest version of Malwarebytes for Windows, we’ve introduced Privacy Controls—a simple screen that brings several privacy settings together in one place, so you can easily decide how Microsoft handles your data.

With four simple toggles, you can decide whether to:

- Allow third-party apps to use your Advertising ID

- Allow third-party content on your lock screen

- Allow third-party content on your Start screen

- Allow Microsoft to use Windows diagnostic data

You can also disable all privacy-impacting features at once.

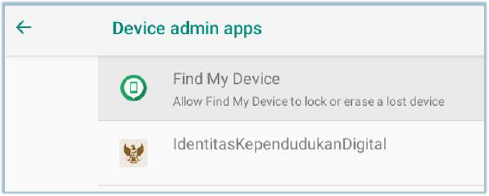

There’s more good news for your privacy. Malwarebytes now also alerts you when “Remote Desktop Programs” are installed on your device.

Remote Desktop Programs are powerful, often legitimate tools that IT teams and tech support use to fix problems remotely, especially since remote work became common. But the deep access these programs provide also makes them a target for cybercriminals. If a real tech support account is compromised, a hacker could use the remote desktop program to tamper with your devices or spy on sensitive information.

There’s also a type of scam—called a tech support scam—where criminals trick people into installing remote desktop programs so they can take control of the victim’s device, potentially stealing data or money down the line.

By flagging these programs, Malwarebytes gives you more visibility into what’s on your computer, so you can stay in control of your privacy and security.
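For the curious, here is a rough idea of what flagging can look like in principle. This is a minimal Python sketch, not how Malwarebytes actually detects these programs: the keyword list and the `flag_remote_desktop_programs` function are illustrative assumptions, and real detection relies on far more than matching names.

```python
# Illustrative only: this keyword list is an assumption made for the example,
# not the detection logic Malwarebytes uses.
REMOTE_DESKTOP_KEYWORDS = ("teamviewer", "anydesk", "vnc", "splashtop")


def flag_remote_desktop_programs(installed_programs):
    """Return the program names that contain a known remote desktop keyword."""
    flagged = []
    for name in installed_programs:
        lowered = name.lower()  # compare case-insensitively
        if any(keyword in lowered for keyword in REMOTE_DESKTOP_KEYWORDS):
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    # A made-up list of installed programs for demonstration.
    installed = ["Mozilla Firefox", "AnyDesk", "TeamViewer 15", "7-Zip"]
    for program in flag_remote_desktop_programs(installed):
        print(f"Remote desktop program found: {program}")
```

The point of the sketch is visibility rather than blocking: a flagged program may be perfectly legitimate, so the decision about whether it belongs on your device stays with you.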

We don’t just report on threats—we remove them

Cybersecurity risks should never spread beyond a headline. Keep threats off your devices by downloading Malwarebytes today.