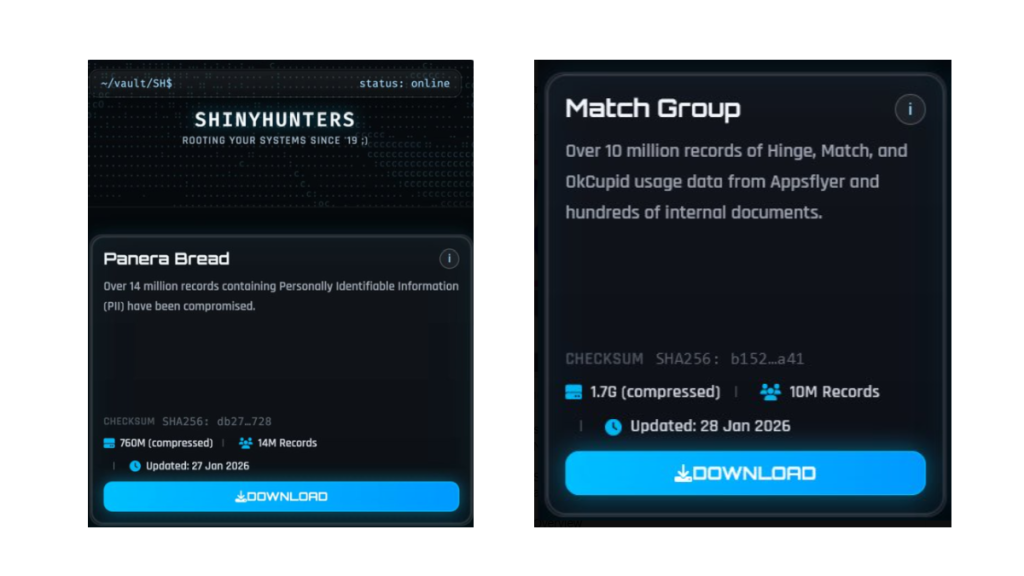

The ShinyHunters ransomware group claims to have stolen 10 million records belonging to the Match Group and 14 million records from bakery-café chain Panera Bread.

The Match Group, which runs multiple popular online dating services including Tinder, Match.com, Meetic, OkCupid, and Hinge, has confirmed a cyber incident and is investigating the data breach.

Panera Bread also confirmed that an incident occurred and has alerted authorities. “The data involved is contact information,” it said in an emailed statement to Reuters.

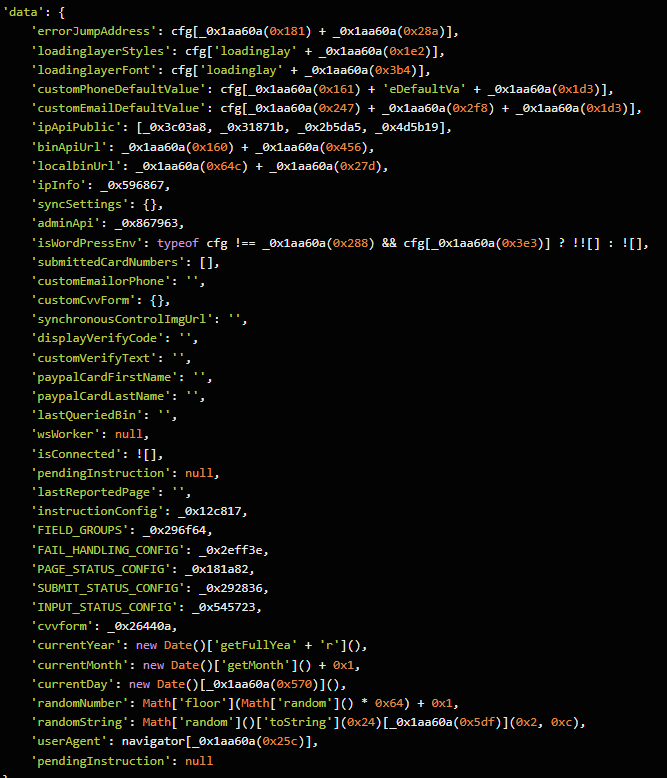

ShinyHunters seems to be gaining access through single sign-on (SSO) platforms and by using voice-cloning techniques, which has resulted in a growing number of breaches across different companies. However, not all of these breaches have the same impact.

The impact

For the Match Group, ShinyHunters claims:

“Over 10 million records of Hinge, Match, and OkCupid usage data from Appsflyer and hundreds of internal documents.”

Match says there is no evidence that logins, financial data, or private chats were stolen, but Personally Identifiable Information (PII) and tracking data for some users are in scope. A notification process has been set in motion.

For Panera Bread, ShinyHunters claims to have compromised 14 million records containing PII.

Panera Bread reassures users that there is no indication that the hackers accessed user login credentials, financial information, or private communications.

ShinyHunters also breached Bumble, CarMax, and Edmunds, among others, but I wanted to use Panera Bread and the Match Group as two examples that have very different consequences for users.

When your activity on a dating app is compromised, the impact can be deeply personal. Concerns can range from partners, family members, or employers discovering dating profiles to the risk of doxxing. For many people, stigma around certain apps can lead to fears of being outed, accused of infidelity, or even extorted.

The impact of the Panera Bread breach will be very different. “I just ordered a sandwich and now some criminals have my home address?” Data like this is useful to criminals for enriching existing data sets. And the more they know about you, the more easily and convincingly they can target you in phishing attempts.

Protecting yourself after a data breach

If you think you have been affected by a data breach, here are steps you can take to protect yourself:

- Check the company’s advice. Every breach is different, so check with the company to find out what’s happened and follow any specific advice it offers.

- Change your password. You can make a stolen password useless to thieves by changing it. Choose a strong password that you don’t use for anything else. Better yet, let a password manager choose one for you.

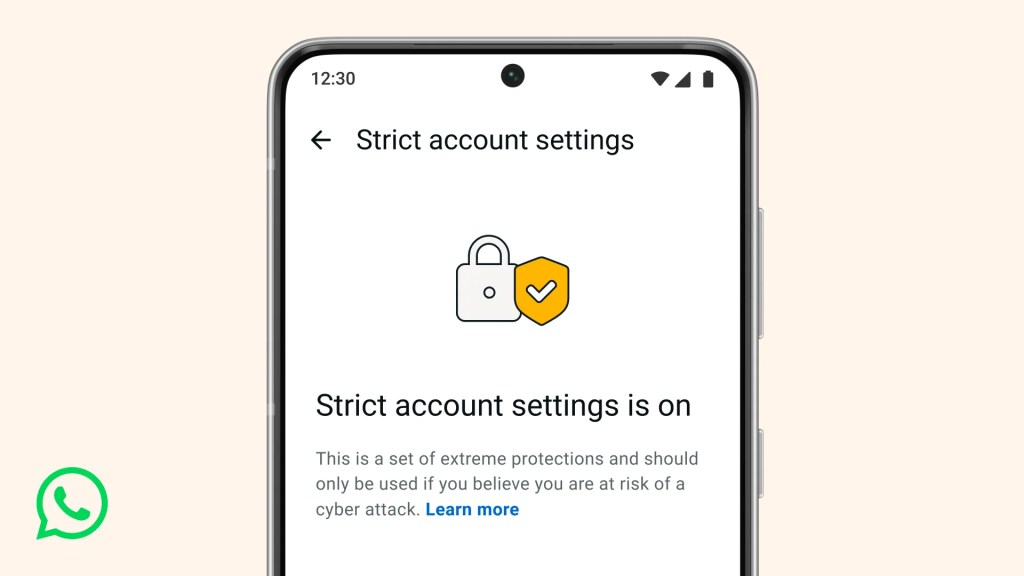

- Enable two-factor authentication (2FA). If you can, use a FIDO2-compliant hardware key, laptop, or phone as your second factor. Some forms of 2FA can be phished just as easily as a password, but 2FA that relies on a FIDO2 device can’t be phished.

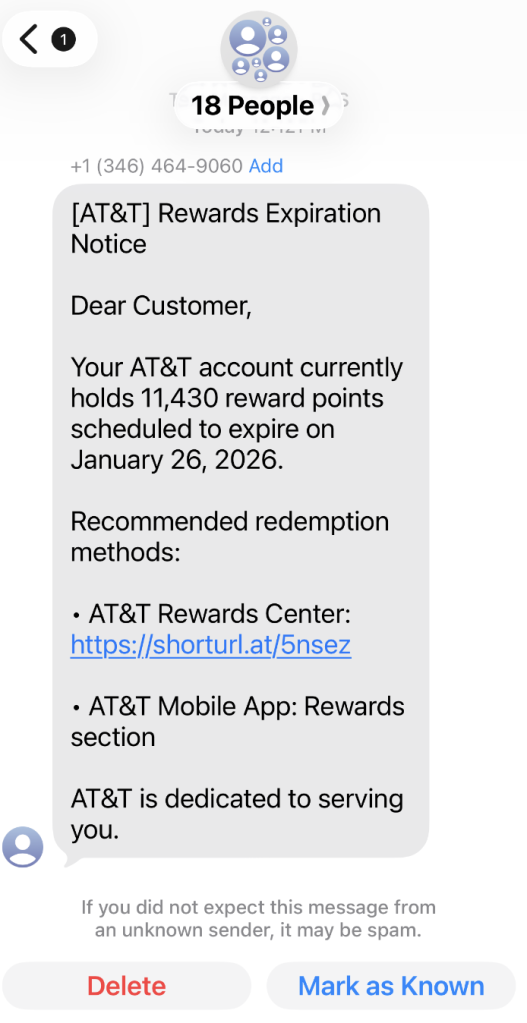

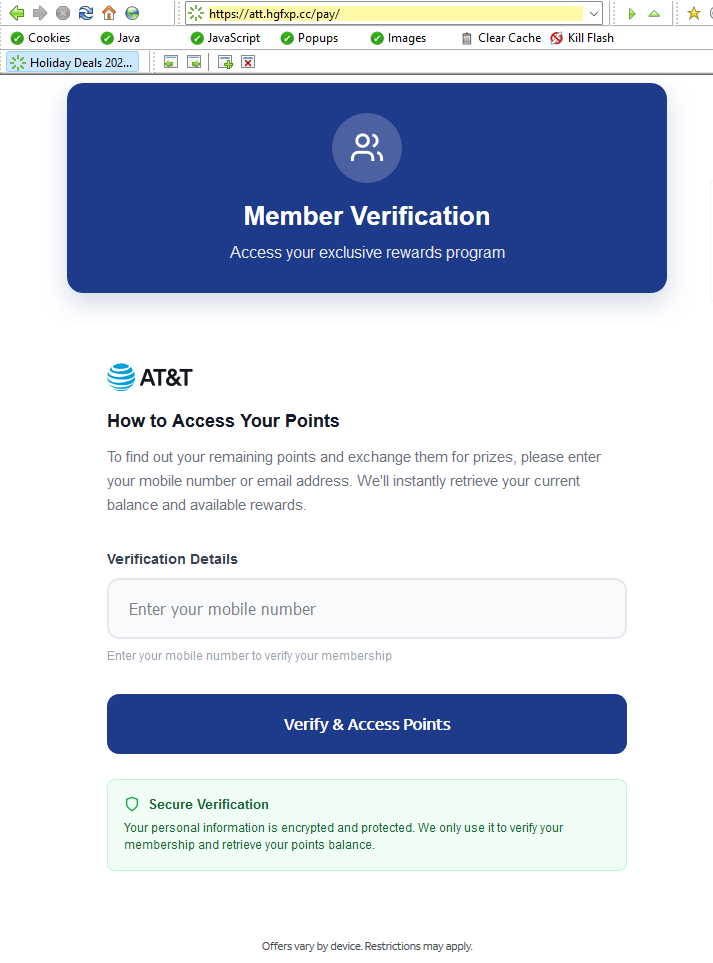

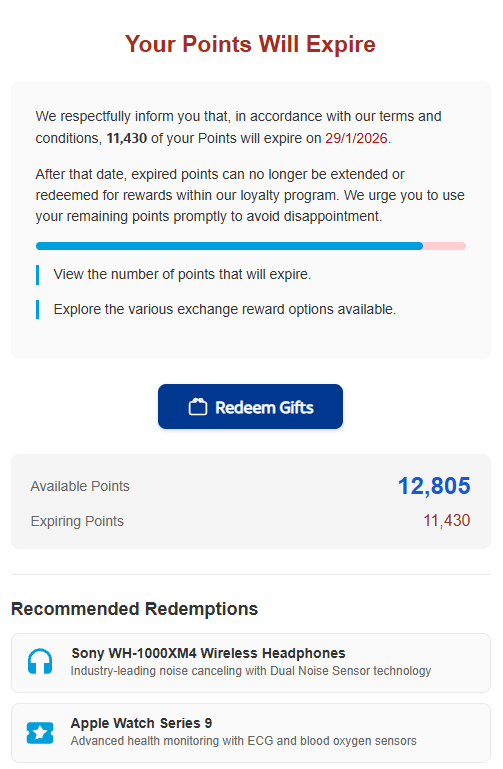

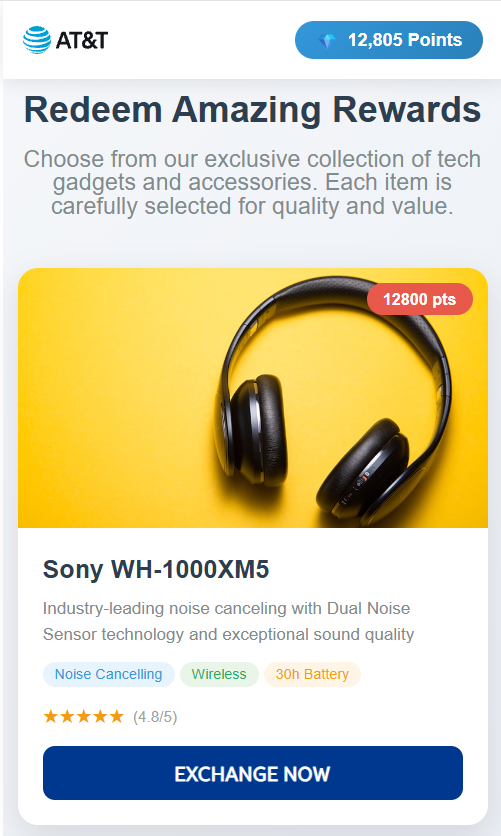

- Watch out for impersonators. The thieves may contact you posing as the breached platform. Check the official website to see if it’s contacting victims and verify the identity of anyone who contacts you using a different communication channel.

- Take your time. Phishing attacks often impersonate people or brands you know, and use themes that require urgent attention, such as missed deliveries, account suspensions, and security alerts.

- Consider not storing your card details. It’s definitely more convenient to let sites remember your card details, but it increases risk if a retailer suffers a breach.

- Set up identity monitoring, which alerts you if your personal information is found being traded illegally online and helps you recover after.
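
For readers curious what “let a password manager choose one for you” amounts to, the idea is to draw every character from a cryptographically secure random source rather than inventing the password yourself. The Python sketch below is only an illustration of that idea, not how any particular password manager works. The function name `generate_password` and the default length of 20 are our own assumptions.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password made of letters, digits, and punctuation.

    Illustrative sketch only: the name and default length are assumptions.
    """
    if length < 4:
        raise ValueError("length must be at least 4")

    alphabet = string.ascii_letters + string.digits + string.punctuation

    while True:
        # secrets.choice draws from the operating system's secure random source
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))

        # Retry until at least one character of each class is present
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

In practice a password manager is still the better choice, because it also stores a different password for every site so you never have to remember or reuse one.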

You can use Malwarebytes’ free Digital Footprint scan to find out if your private information is exposed online.

We don’t just report on threats—we help safeguard your entire digital identity

Cybersecurity risks should never spread beyond a headline. Protect your personal information, and your family’s, by using identity protection.