Loyal readers and other privacy-conscious people will be familiar with the expression, “If it sounds too good to be true, it probably is.”

Getting paid handsomely to scroll social media definitely falls into that category. It sounds like an easy side hustle, which usually means there’s a catch.

In January 2026, an app called Freecash shot up to the number two spot on Apple’s free iOS chart in the US, helped along by TikTok ads that looked a lot like job offers from TikTok itself. The ads promised up to $35 an hour to watch your “For You” page. According to reporting, the ads didn’t promote Freecash by name. Instead, they showed a young woman expressing excitement about seemingly being “hired by TikTok” to watch videos for money.

The landing pages featured TikTok and Freecash logos and invited users to “get paid to scroll” and “cash out instantly,” implying a simple exchange of time for money.

Those claims were misleading enough that TikTok said the ads violated its rules on financial misrepresentation and removed some of them.

Once you install the app, the promised TikTok paycheck vanishes. Instead, Freecash routes you to a rotating roster of mobile games—titles like Monopoly Go and Disney Solitaire—and offers cash rewards for completing time‑limited in‑game challenges. Payouts range from a single cent for a few minutes of daily play up to triple‑digit amounts if you reach high levels within a fixed period.

The whole setup is designed not to reward scrolling, as it claims, but to funnel you into games where you are likely to spend money or watch paid advertisements.

Freecash’s parent company, Berlin‑based Almedia, openly describes the platform as a way to match mobile game developers with users who are likely to install and spend. The company’s CEO has spoken publicly about using past spending data to steer users toward the genres where they’re most “valuable” to advertisers.

Our concern, beyond the bait-and-switch, is the privacy issue. Freecash’s privacy policy allows the automatic collection of highly sensitive information, including data about race, religion, sex life, sexual orientation, health, and biometrics. Each additional mobile game you install to chase rewards adds its own privacy policy, tracking, and telemetry. Together, they greatly increase how much behavioral data these companies can harvest about a user.

Experts warn that data brokers already trade lists of people likely to be more susceptible to scams or compulsive online behavior—profiles that apps like this can help refine.

We’ve previously reported on data brokers that used games and apps to build massive databases, only to later suffer breaches exposing all that data.

When asked about the ads, Freecash said the most misleading TikTok promotions were created by third-party affiliates, not by the company itself. That is quite possible, because Freecash does offer an affiliate payout program to people who promote the app online. The company did, however, promise to review and tighten its partner monitoring.

For experienced users, the pattern should feel familiar: eye‑catching promises of easy money, a bait‑and‑switch into something that takes more time and effort than advertised, and a business model that suddenly makes sense when you realize your attention and data are the real products.

How to stay private

Free cash? Apparently, there is no such thing.

If you’re curious how intrusive schemes like this can be, consider using a separate email address created specifically for testing. Avoid sharing real personal details. Many users report that once they sign up, marketing emails quickly pile up.

Some of these schemes also appeal to people who are younger or under financial pressure, offering tiny payouts while generating far more value for advertisers and app developers.

So, what can you do?

- Gather information about the company you’re about to give your data to. Talk to friends and relatives about your plans. Shared common sense often helps you make the right decisions.

- Create a separate account if you want to test a service. Use a dedicated email address and avoid sharing real personal details.

- Limit the information you provide online to what makes sense for the purpose. Does a game publisher need your Social Security number? I don’t think so.

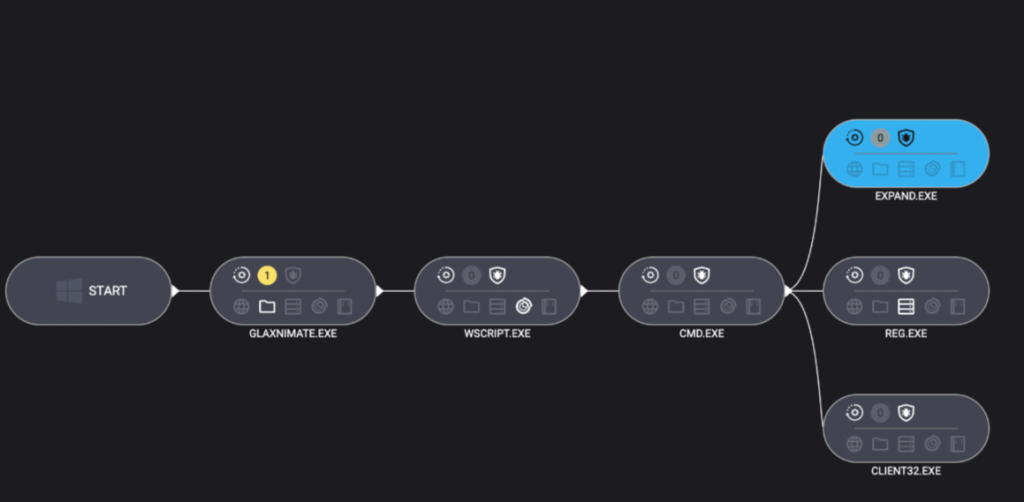

- Be cautious about app installs that are framed as required to make the money initially promised, and review permissions carefully.

- Use an up-to-date, real-time anti-malware solution on all your devices.

Work from the premise that free money does not exist. Try to work out the business model of those offering it, and then decide.
